When you read the title, did you imagine an injured ax-bearing farmer or a terrifyingly well-adapted cow? The second reading seems literally laughable, of course, but to a computer, which is less aware than we are that cows lack opposable thumbs, both seem plausible. Both readings obey English grammar, after all, and if the title read “Enraged Farmer Injures Cow with Ax,” the second reading would look natural.

Our bovine predicament exemplifies the surprising variety of interpretations that even tiny snippets of language can support. The culprit here is what linguists call composition: the process by which individual words are built into larger units like “enraged cow,” “farmer with ax,” and, eventually, entire sentences. Composition poses one of the most fundamental challenges to automating language understanding, and it also offers some of the most fertile ground in modern artificial intelligence (AI) research.

The importance of getting composition right grows with AI’s ambitions. In the prehistoric 1990s and early 2000s, rudimentary computer intelligences needed only the most primitive grasp of human language. The simplest search engines, if asked for “airport named for a World War II hero,” would return a list of documents containing the words “airport,” “named,” “world,” “war,” and “hero.” Gone are the larger units like “World War II hero,” and with them goes most of the query’s meaning. These days, however, we expect AIs to do things like win *Jeopardy!*. They absolutely must be able to look for an airport with a particular relationship to someone considered a World War II hero.

Fortunately, over the past decade, researchers have made the composition puzzle much less daunting to the computerized mind. The key has been statistical parsing, a mathematical approach to composition that lets the computer consider a wide variety of possible groupings and choose among them based on their plausibility.
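
To make “plausibility” concrete, here is a minimal Python sketch of one classic scoring scheme, a probabilistic context-free grammar (PCFG), in which a grouping’s score is the product of the probabilities of the rules used to build it. The grammar, category names, and probabilities are hypothetical values chosen for illustration, not taken from any real parser. Lexical probabilities are left out because both candidate trees use the same words with the same tags, so they would scale both scores equally.

```python
import math

# Hypothetical rule probabilities for a toy grammar (illustration only).
# Rules sharing a left-hand side sum to 1 (NP: 0.2 + 0.4 + 0.4; VP: 0.7 + 0.3).
RULE_PROB = {
    ("S", ("NP", "VP")): 1.0,
    ("NP", ("Adj", "N")): 0.2,
    ("NP", ("N",)): 0.4,
    ("NP", ("NP", "PP")): 0.4,
    ("VP", ("V", "NP")): 0.7,
    ("VP", ("VP", "PP")): 0.3,
    ("PP", ("P", "NP")): 1.0,
}

# Trees are nested tuples: (label, child, ...); a preterminal is (tag, word).
FARMER_HAS_AX = (
    "S",
    ("NP", ("Adj", "enraged"), ("N", "cow")),
    ("VP", ("V", "injures"),
           ("NP", ("NP", ("N", "farmer")),
                  ("PP", ("P", "with"), ("NP", ("N", "ax"))))),
)
COW_HAS_AX = (
    "S",
    ("NP", ("Adj", "enraged"), ("N", "cow")),
    ("VP", ("VP", ("V", "injures"), ("NP", ("N", "farmer"))),
           ("PP", ("P", "with"), ("NP", ("N", "ax")))),
)


def rules(tree):
    """Yield (parent, child_labels) for every phrase node in the tree."""
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        return  # preterminal (tag, word): lexical rules are not scored here
    yield (label, tuple(child[0] for child in children))
    for child in children:
        yield from rules(child)


def log_score(tree):
    """Log of the product of rule probabilities (summing logs avoids underflow)."""
    return sum(math.log(RULE_PROB[rule]) for rule in rules(tree))


best = max([FARMER_HAS_AX, COW_HAS_AX], key=log_score)
print("farmer holds the ax" if best is FARMER_HAS_AX else "cow holds the ax")
# prints "farmer holds the ax"
```

With these made-up numbers the farmer-holds-the-ax tree scores about 0.00896 against about 0.00672 for the cow-holds-the-ax tree. A plain PCFG like this settles the attachment purely on how often each rule occurs; it knows nothing about cows or thumbs.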

To map out the possibilities, the computer analyzes divisions of a sentence into larger and larger units, starting with individual words. Think of it as an abstract Lego project where each word is a piece: the pieces can be slotted together in an unimaginable number of ways, but only one combination gives you the spaceship; the rest lead to misshapen wrecks.
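
Just how large is that space? A rough sense comes from counting only the ways to bracket a string of words into a binary-branching tree, ignoring category labels entirely. The short Python sketch below fills a table of spans from the shortest upward, the same bottom-up, span-by-span organization that chart parsers such as the CKY algorithm use; the function name `count_binary_trees` is hypothetical. The counts it produces are the Catalan numbers.

```python
def count_binary_trees(n_words: int) -> int:
    """Count the distinct binary bracketings of a sentence of n_words words."""
    if n_words < 1:
        raise ValueError("need at least one word")
    # ways[i][j] = number of binary trees spanning words i..j-1
    ways = [[0] * (n_words + 1) for _ in range(n_words + 1)]
    for i in range(n_words):
        ways[i][i + 1] = 1  # a single word is its own unit
    for length in range(2, n_words + 1):  # shorter spans are filled first
        for i in range(n_words - length + 1):
            j = i + length
            # Each split point k divides the span into words i..k-1 and k..j-1.
            ways[i][j] = sum(ways[i][k] * ways[k][j] for k in range(i + 1, j))
    return ways[0][n_words]


for n in (6, 10, 20):
    print(n, count_binary_trees(n))
```

For the six-word sentence “enraged cow injures farmer with ax” this gives 42 bracketings; at 20 words the count passes 1.7 billion, before a single category label is considered.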

Unlike Lego sets, unfortunately, sentences don’t usually come with instruction manuals or blueprints. Instead, computers learn to recognize good sentence architecture by poring over a multitude of example sentences whose correct structures have been worked out by human experts. During this training phase, the computer identifies features of good and bad blueprints and quantifies how good or bad each feature is by how often it occurs in correct versus incorrect structures. The end result is a composition algorithm grounded in the statistics of human-produced language. In the bovine example, a well-trained computer would know to build small units like “enraged cow” and “farmer with ax,” then combine them into larger ones like “injures farmer with ax” and, eventually, the whole sentence.
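
The simplest version of learning from expert-built examples is to count. The Python sketch below estimates PCFG rule probabilities by relative frequency, P(A → β) = count(A → β) / count(A), from a tiny, hypothetical treebank of hand-built trees. It looks only at correct structures; training that also weighs features of incorrect structures, as described above, is a richer approach that this sketch does not attempt.

```python
from collections import Counter

# A tiny hypothetical treebank: (label, child, ...) with (tag, word) leaves.
TREEBANK = [
    ("S", ("NP", ("N", "farmers")),
          ("VP", ("V", "raise"), ("NP", ("N", "cows")))),
    ("S", ("NP", ("Adj", "enraged"), ("N", "cows")),
          ("VP", ("V", "kick"),
                 ("NP", ("NP", ("N", "farmers")),
                        ("PP", ("P", "with"), ("NP", ("N", "hats")))))),
]


def extract_rules(tree):
    """Yield every (left-hand side, right-hand side) rule used in a tree."""
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        yield (label, (children[0],))  # lexical rule, e.g. N -> cows
        return
    yield (label, tuple(child[0] for child in children))
    for child in children:
        yield from extract_rules(child)


def estimate_rule_probs(treebank):
    """Relative-frequency (maximum-likelihood) estimates of rule probabilities."""
    rule_counts = Counter()
    lhs_counts = Counter()
    for tree in treebank:
        for lhs, rhs in extract_rules(tree):
            rule_counts[(lhs, rhs)] += 1
            lhs_counts[lhs] += 1
    return {rule: n / lhs_counts[rule[0]] for rule, n in rule_counts.items()}


probs = estimate_rule_probs(TREEBANK)
print(probs[("NP", ("N",))], probs[("NP", ("NP", "PP"))])
```

Here NP → N gets 4/6 and NP → NP PP gets 1/6, because 4 of the 6 noun phrases in the toy treebank are bare nouns and 1 has a prepositional phrase attached.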

According to UC Berkeley Professor Dan Klein, parsing algorithms work well thanks to a confluence of three factors: sophisticated baked-in linguistic knowledge, effective learning from examples, and careful exploitation of modern computing speed. Klein’s research group has innovated in all three areas. In just the last few years, they have used graphics processing units (GPUs), hardware originally designed for graphics applications, to dramatically speed up parsing. They have also imported ideas from neural networks, a traditionally separate field whose algorithms are loosely inspired by the architecture of the human brain, to increase the learning capabilities of parsing algorithms. As a result, his group’s methods define the current state of the art in parsing for English and several other languages.

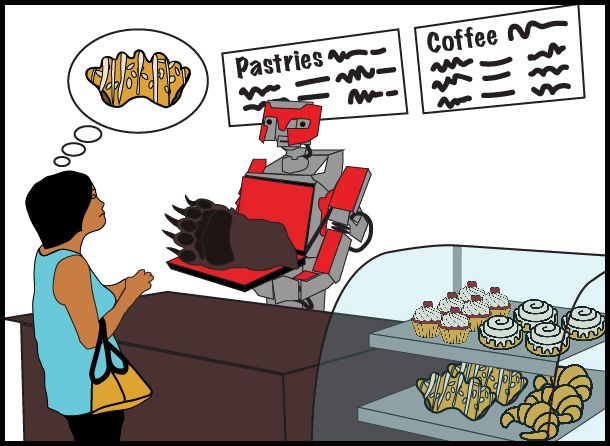

Now, research at Berkeley and beyond increasingly focuses on a harder question: how is the meaning of a group of words determined by the meanings of its parts? “The problem,” says Klein, “is that humans have a lot of context when interpreting a statement that computers just don’t.” For instance, in a bakery, you would likely interpret “bear claw” as a pastry, not a literal bear’s claw. But you can draw that conclusion only from background knowledge that computers lack or, when they can access it, are far worse at exploiting.

The riddle of semantics, and by extension the dream of a language-comprehending AI, is therefore intimately bound up with much larger questions about knowledge of the world and how to inject it effectively into computers. How that riddle will be answered is anyone’s guess, but one thing seems clear: the search for the answer will keep AI researchers scratching their heads for a long while to come.

This article is part of the Fall 2015 issue.