The dawn of a new breed

One small step for robot dogs, one big step for machine learning

From the heart of campus to high up in the fire trails, dogs can be spotted all around Berkeley as owners take their furry companions on walks. Recently, you may have seen other four-legged friends taking these same journeys: robotic dogs. Standing two feet tall, robotic dogs are starting to meander around campus under the direction of multiple research groups at UC Berkeley. These research teams are leading us toward a new era of advanced robotics by teaching the robots to walk using machine learning techniques and the diverse terrain available on campus.

Quadruped, or four-legged, robots from the company Boston Dynamics rose to prominence after videos of them dancing, jumping, and flipping went viral. Beyond being fun to watch, quadruped robots can move through harsh conditions that are hazardous or difficult for humans to navigate. However, teaching a machine to walk is not as effortless as these robots make it look. While Boston Dynamics’ videos are short, the movements they depict are the product of many long hours of programming.

Through new advances in machine learning, similar robots can now teach themselves to move without being directly programmed. These advancements, pioneered by researchers at UC Berkeley, not only broaden the applications for the robots themselves by enabling them to adapt to difficult or unexpected terrain, but also advance our knowledge of how robots learn, ultimately giving scientists a deeper understanding of artificial intelligence. It is this knowledge that the research groups of Professors Pieter Abbeel and Sergey Levine, among others at UC Berkeley, are seeking, each through a unique approach.

Research conducted in Professor Levine’s group in the Department of Electrical Engineering and Computer Sciences has increased the speed at which robots teach themselves to walk on a variety of surfaces. Robots in Levine’s studies are set free on a wide range of surfaces, including pavement, grass, and memory foam. Each robot then learns the specific walking techniques needed to navigate a given surface, such as kicking its feet up to free itself from mulch or lifting its feet more vertically to combat the compression of memory foam. The robots constantly monitor their position, stability, and forward progress; forward movement counts as a success that the robot learns from and stores in its memory. These learning cycles repeat over and over, allowing a robot to go from not being able to walk at all to walking and recovering from falls within 20 minutes. A not-so-furry friend learning to walk through trial and error alone, rather than simply following preprogrammed actions, is a first for the robotics field.
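To make the trial-and-error idea concrete, here is a minimal sketch in Python. It is not the Levine group's method: it uses simple hill climbing on a single made-up gait setting, and the function `forward_progress`, the `lift_height` setting, and the `surface_softness` value are all hypothetical stand-ins for what a real robot would measure. The only idea it borrows from the description above is that an attempt is kept when it produces more forward progress than the previous best.

```python
import random


def forward_progress(lift_height, surface_softness):
    """Hypothetical stand-in for one real-world attempt at walking.

    Returns how far the robot moved forward. In this toy setup, progress
    is highest when the foot lift matches the softness of the surface.
    """
    return max(0.0, 1.0 - abs(lift_height - surface_softness))


def learn_gait(surface_softness, trials=200, seed=0):
    """Improve a single gait setting by trial and error (hill climbing)."""
    rng = random.Random(seed)
    best_lift = 0.0
    best_progress = forward_progress(best_lift, surface_softness)
    for _ in range(trials):
        # Try a small random variation of the best gait found so far.
        candidate = min(1.0, max(0.0, best_lift + rng.uniform(-0.1, 0.1)))
        progress = forward_progress(candidate, surface_softness)
        # Keep the variation only if it moved the robot farther forward.
        if progress > best_progress:
            best_lift, best_progress = candidate, progress
    return best_lift, best_progress


if __name__ == "__main__":
    lift, progress = learn_gait(surface_softness=0.8)
    print(f"learned lift height: {lift:.2f}, forward progress: {progress:.2f}")
```

Because a change is only accepted when it improves forward progress, the result can never be worse than the starting gait. A real robot would adjust many settings at once and learn from noisy sensor readings rather than a tidy formula.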

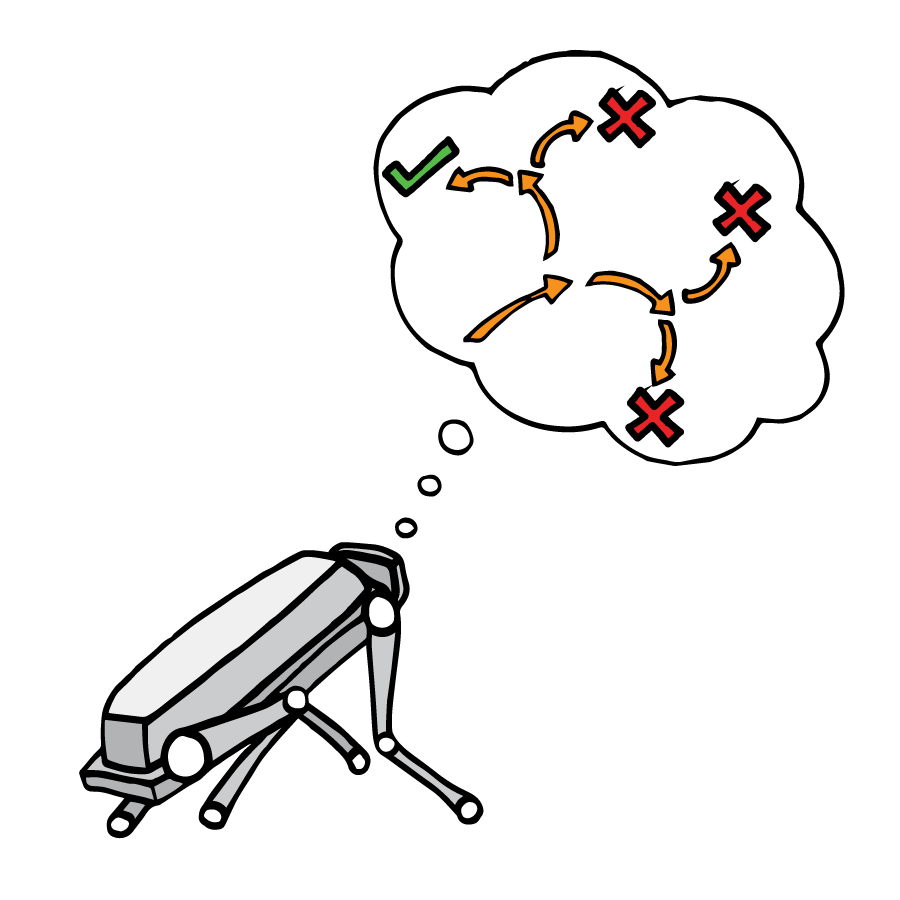

In contrast to the Levine group’s real-world learning method, Abbeel, the director of the Berkeley Robot Learning Lab and co-director of the Berkeley Artificial Intelligence Research lab, and his team have developed a world model to teach their robots to walk. Alejandro Escontrela, a graduate student researcher in the group, explains, “A world model is a model that has inputs which are the robot’s observations [and] also gets conditioned on actions that the robot takes and on previous states of the world model to predict the future.” This approach allows for further improvement in the robot’s abilities because “it gives the experience rollouts that you need to figure out what’s right and what’s wrong.” A robot stuck in mulch cycles through all its options for escape in its imaginary, programmed world, picks the best one, and then frees itself, all without having to bark up wrong trees in the physical world. Enabling the robots to predict the correct action in the imagined space and then act on the best choice in the real world has allowed them to learn to walk with minimal initial data, going from being unable to stand to walking consistently after an hour.
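The "imagine first, act second" idea can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the Abbeel group's system: a real world model is learned from the robot's own data, whereas the `world_model` function here is hand-written, and the three actions, the `depth` state (how deeply a foot is stuck), and the exhaustive search over every action sequence are all hypothetical choices made to mirror the mulch example above.

```python
from itertools import product

# Hypothetical actions a stuck robot could try.
ACTIONS = ("step_forward", "lift_leg", "wiggle")


def world_model(depth, action):
    """Hand-written stand-in for a learned world model.

    Given how deeply the foot is stuck and an action, predict the next
    depth and the forward progress that action would produce.
    """
    if action == "step_forward":
        if depth == 0:
            return 0, 1.0        # free foot: the step succeeds
        return depth + 1, 0.0    # stuck foot: pushing digs it in deeper
    if action == "lift_leg":
        return max(0, depth - 2), 0.0
    return max(0, depth - 1), 0.0  # wiggle loosens the foot a little


def imagine(depth, actions):
    """Roll out a sequence of actions in the model, not the real world."""
    total = 0.0
    for action in actions:
        depth, progress = world_model(depth, action)
        total += progress
    return total


def plan(depth, horizon=3):
    """Imagine every possible action sequence and return the best one."""
    candidates = product(ACTIONS, repeat=horizon)
    best = max(candidates, key=lambda seq: imagine(depth, seq))
    return best, imagine(depth, best)


if __name__ == "__main__":
    best_sequence, predicted_progress = plan(depth=2)
    print(best_sequence, predicted_progress)
```

Starting with the foot two units deep, the planner settles on lifting the leg once and then stepping forward twice, having ruled out the alternatives without trying any of them physically. Checking every sequence only works for a tiny example like this one; with more actions or a longer horizon, the number of sequences grows too quickly to enumerate.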

Taking diverse approaches to the same task, learning to walk, allows the robots to rapidly increase their know-how. Beyond the “cool factor,” equipping the robots with the tools to teach themselves to walk has both direct and indirect applications. Quadruped robots that can navigate rough terrain and adapt to challenges in their mobility could be especially useful in search and rescue, construction, and inspection. However, the knowledge gained through this machine learning research extends far beyond the robots themselves and will ultimately help artificial intelligence achieve increasingly complex tasks. These lumbering, stumbling robots are helping create the technology that will propel us into a future of new robotic and artificial intelligence capabilities.

This article is part of the Spring 2023 issue.