All’s (not) fair in data and AI

Addressing bias in machine learning

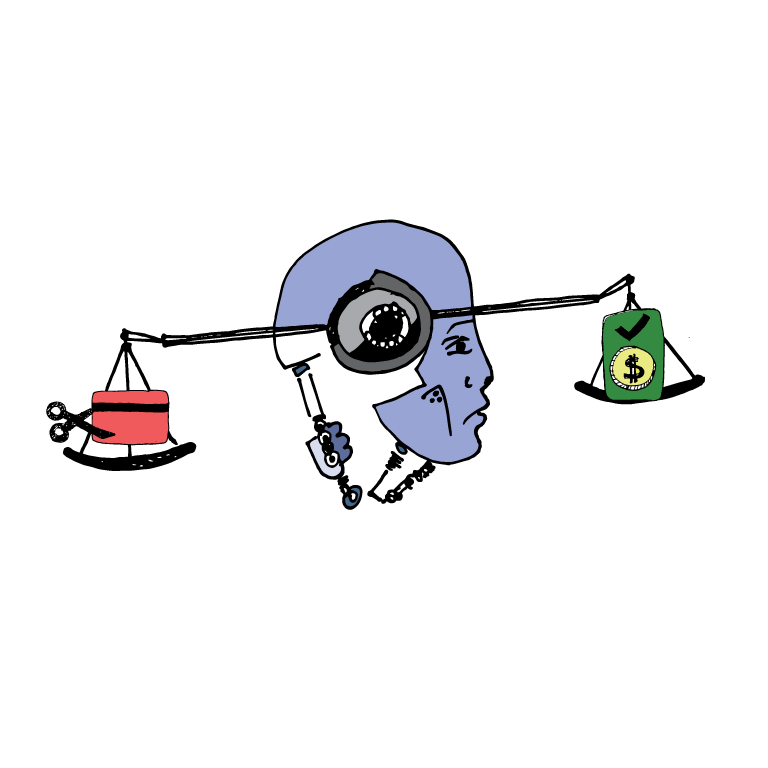

Machine learning algorithms are known for their ability to predict many complex things, and they are slowly being integrated into everyday life. For example, when a person applies for a loan, a specially constructed machine learning algorithm can use information about the applicant (the input) to make a prediction about whether they are likely to default on their loan (the output). In principle, such an algorithm can provide a systematic method for deciding whether a loan should be issued to the applicant, and any bias held by the loan lender against the applicant does not factor into the decision.

However, machine learning algorithms can themselves exhibit biases that lead to harmful decisions. These biases often originate in the way engineers train the algorithms to make predictions. For instance, when an algorithm creator trains an algorithm on records of previous loan recipients, they may unknowingly use records that reflect historical biases. Skewed historical records can, for example, falsely suggest that Black individuals will default on a loan more often than non-Black individuals. An algorithm trained on this data would pick up on, and reproduce, this misleading pattern, making it harder for certain individuals to receive loans and exacerbating inequalities.

One way that researchers try to combat these biases is to develop algorithmic “interventions.” These interventions modify an algorithm so that it satisfies chosen fairness criteria, for example, that the algorithm produces the same proportion of false predictions for all races. To evaluate the effectiveness of different interventions, some researchers use benchmark datasets (publicly available sets of sample inputs and outputs shared by a community of researchers) to determine whether an intervention causes the algorithm to satisfy the fairness criteria without sacrificing prediction accuracy. However, these benchmark datasets have limitations of their own, which can result in inaccurate evaluations of algorithmic interventions. Researchers at UC Berkeley are investigating ways to mitigate the inaccuracies caused by these dataset shortcomings.
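To make the fairness criterion mentioned above concrete, here is a minimal Python sketch of how one might check whether an algorithm produces the same proportion of false predictions for every group. The function names and the toy data are invented for illustration; they do not come from the researchers' work or from any benchmark dataset.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Return the fraction of incorrect predictions within each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up toy data: 1 = defaulted on the loan, 0 = repaid it.
y_true = [1, 0, 1, 0, 1, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 1, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = error_rate_by_group(y_true, y_pred, groups)
gap = max_error_gap(rates)
print(rates, gap)
```

In this toy example the algorithm is wrong for one of four people in group A and for two of four in group B, so it would not satisfy the criterion. An intervention would aim to shrink that gap toward zero while keeping overall accuracy high.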

One problem arises when the benchmark dataset contains mistakes, known as “noise.” For example, the dataset may list incorrect race information for certain individuals. This noise makes it difficult to determine whether an algorithm actually fulfills the fairness criteria. Two PhD students working with Professor Michael Jordan in the UC Berkeley Electrical Engineering and Computer Science (EECS) department, Serena Wang and Wenshuo Guo, wanted to see if they could find a way to ensure that an algorithm fulfills certain fairness constraints even if the dataset contains a certain amount of noise. “The answer is yes,” says Guo. Wang and Guo devised a method in which the algorithm creator uses additional data to estimate the probability that a dataset feature is incorrect. The algorithm creator then uses the estimated probabilities to construct an additional training constraint that ensures the algorithm fulfills the fairness criteria despite the noise.
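The sketch below illustrates the general idea of folding estimated error probabilities into a fairness measurement. It is a simplified stand-in, not Wang and Guo's actual method: it assumes exactly two groups, assumes that an estimate of how likely each recorded group label is to be wrong is already available, and only measures an error rate rather than building a training constraint. All names and numbers are hypothetical.

```python
def weighted_error_rate(y_true, y_pred, recorded_group, p_wrong, target):
    """Probability-weighted error rate for the `target` group.

    Assumes two groups. `p_wrong[i]` is an assumed estimate of the
    probability that person i's recorded group label is incorrect.
    """
    weighted_errors = 0.0
    total_weight = 0.0
    for truth, pred, group, p in zip(y_true, y_pred, recorded_group, p_wrong):
        # Estimated probability that this person truly belongs to `target`.
        weight = (1.0 - p) if group == target else p
        total_weight += weight
        if truth != pred:
            weighted_errors += weight
    if total_weight == 0.0:
        raise ValueError("no probability mass assigned to the target group")
    return weighted_errors / total_weight


# Made-up toy data: the algorithm makes a single mistake (second person).
y_true = [1, 0, 1, 0]
y_pred = [1, 1, 1, 0]
recorded_group = ["A", "A", "B", "B"]
p_wrong = [0.0, 0.5, 0.5, 0.0]  # two of the recorded labels are uncertain

rate_a = weighted_error_rate(y_true, y_pred, recorded_group, p_wrong, "A")
rate_b = weighted_error_rate(y_true, y_pred, recorded_group, p_wrong, "B")
print(rate_a, rate_b)
```

Taking the recorded labels at face value, the error rate here would be one in two for group A and zero for group B. Once the uncertainty in the labels is accounted for, both weighted rates come out the same, which shows how noise in group membership can change the conclusion of a fairness evaluation.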

Another problem comes from the type of information in the dataset. Frances Ding, a Berkeley EECS PhD student working with Professor Moritz Hardt, investigated a benchmark dataset called “UCI Adult,” which contains information about example individuals, including whether an individual earns more than $50,000 per year. This dataset is commonly used to evaluate algorithmic interventions for estimating income, but Ding and her collaborators found that the dataset can produce misleading conclusions. They realized that when the UCI Adult dataset was constructed, most people—particularly Black individuals and women—earned less than $50,000 per year. This skew in the distribution created a bias in the dataset, and any algorithm trained on this dataset was particularly likely to predict that an individual earns less than $50,000 per year.
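A small Python sketch shows why this kind of skew matters. The labels below are invented and are not the real UCI Adult proportions; the point is only that when one outcome dominates, a "model" that always predicts that outcome can look accurate without having learned anything about individuals.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy of always predicting the most common label."""
    counts = Counter(labels)
    _, majority_count = counts.most_common(1)[0]
    return majority_count / len(labels)


# Made-up toy labels: 8 of 10 people fall below the income threshold.
labels = ["<=50K"] * 8 + [">50K"] * 2

baseline = majority_baseline_accuracy(labels)
print(baseline)
```

Any evaluation on skewed data should therefore be compared against this kind of baseline, since a high accuracy figure alone says little about whether an algorithm or an intervention is working.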

“Because certain modifications to the dataset can sometimes result in different conclusions about the biases of a particular algorithm, it would be nice to have many benchmarks for algorithmic fairness,” Ding noted. To begin to address this shortcoming, Ding and her collaborators used publicly available US Census data to add more types of information to the UCI Adult dataset, providing algorithm evaluators with more options for accurately measuring algorithmic bias.

Reducing biases in machine learning algorithms is a complex process, but these UC Berkeley researchers hope that better methods for detecting and measuring biases will be an important first step toward meeting that larger challenge.

-------

Cathy Chen is a graduate student in electrical engineering and computer science.

Design by Chenyu Zhang

This article is part of the Fall 2021 issue.