Making the cut

How technology makes better surgeons

Surgery is a difficult task. Procedures often do not go according to plan, even for the most experienced surgeons. As you might have seen in medical dramas, doctors must make quick, lifesaving decisions when a routine surgery suddenly takes a turn for the worse. These moments might be dramatized for television, but they show the intense pressure that medical personnel face when they take responsibility for the lives of their patients.

Doctors are not perfect. Even if they know a surgical protocol well, they can easily get tired during long surgeries and become more prone to error. How can we amplify the capabilities of doctors to make their lives easier and our medical outcomes better? Superior training tools, dexterous robots, and artificial intelligence (AI) all have their place in the future of medicine.

Phantom of the opera-tion

Medical students and new surgeons learn by doing. But with rare or experimental surgeries, hands-on training isn’t always an option.

Dr. Neil Long, an emergency physician at the Burnaby Hospital in British Columbia, Canada, does surgical research focused on pericardiocentesis, a procedure that removes fluid that has built up around the heart. At best, this excess fluid can cause the heart to malfunction. At worst, the fluid pressure squeezes the heart until it stops entirely.

“It’s a fairly rare procedure,” Long explains. “The classic mantra of surgery is ‘see one, do one, teach one,’ and the opportunity to practice on patients in the emergency department for this procedure is far less controlled.”

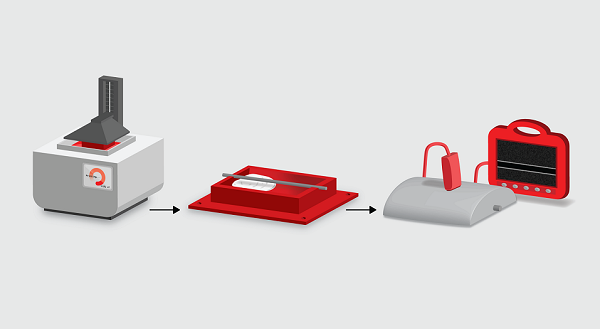

Medicine, however, does not look kindly upon blindly inserting needles into chest cavities. An alternative to “see one, do one, teach one” is a skill trainer called a phantom. Phantoms are physical models that mimic the properties of real tissue, even though they do not necessarily look like realistic organs. Surgical phantoms often serve as a proxy to help surgeons learn the rudimentary skills they need before working on actual patients. When trainees later perform the procedure on a real patient, it will still be new to them, but because they have practiced on a phantom, they can go in with more confidence in their abilities.

Molds for low-cost phantoms are 3D printed (left) and filled with gelatin or other materials that mimic tissue (middle). In this example, the resulting object approximates the imaging conditions of an arterial ultrasound procedure (right). Credit: Gautam Gunjala

Such tools seem almost necessary, but cost is a major barrier to access. A surgeon who wants phantoms for a range of surgical procedures would need a menagerie of them, since different phantoms are used for different parts of the body. With regard to pericardiocentesis in particular, “there’s an existing phantom on the market that’s $3,000,” Long recounts. “But everyone’s got a tight budget.”

Because many of the pericardiocentesis phantoms on the market do a poor job of capturing the actual procedure, their cost is hard to justify. As a result, medical researchers are turning to large corporations for help in using new technology to bring prices down. Long maintains a strong research relationship with collaborators at Microsoft. Not only does the company bring its considerable resources, but it also provides fresh eyes for old problems.

Recently, Microsoft engineers worked with the Burnaby Hospital to design a prototype that is cost-efficient and easy to scale to other teaching institutions. “At least one version of the phantom is as cheap as possible. It’s a relatively small cost that people can afford,” Long explains.

Interested surgical trainees would simply need to download the code and pay someone to print the phantom’s molds on a 3D printer. Tissue-mimicking materials are then added to the printed molds, producing a better phantom than what is currently on the market. As 3D printing advances and printers become more affordable, a world in which medical students can practice lifesaving procedures without the stress of operating on a real person looks increasingly within reach.

A call to arms: robotic surgeons

Surgeons are also turning to robotic surgery to enhance their skills. “Surgeons’ acceptance of robots falls into several camps,” explains Dr. Michael Mastrangelo of Bend Surgical Associates. Mastrangelo was an early adopter of minimally invasive laparoscopic surgery in the 1990s and later of robotic surgery, once the technology became available. “Some feel that robots shouldn’t be used at all, and others believe that robotic surgery is where surgery is eventually going.”

Many surgeons were hesitant to move into the age of robotics: robots are bulky, require a lengthy setup, add cost for the patient, and require a hospital setting rather than an outpatient surgery center. As the technology improved, however, it became evident that robotic surgery could outshine laparoscopy in several procedures, including prostatectomies, colon resections, and hysterectomies. Furthermore, certain “open surgery” procedures could now be performed in a minimally invasive way. Mechanical improvements were a major factor.

“Imagine you’re trying to tie your shoes with your wrist locked,” says Mastrangelo. “That’s what laparoscopic surgery is like. Laparoscopic instruments don’t have wrists, but robotic instruments give you additional freedom to move—more of an intuitive natural movement when you’re tying a knot and suturing.”

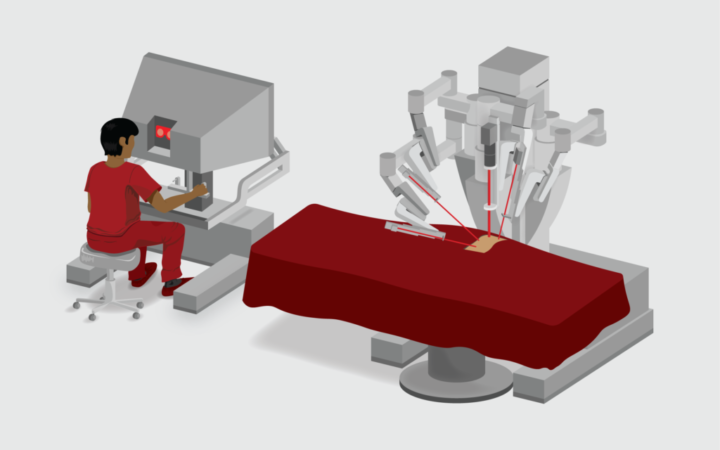

In the last two decades, new surgical robots have turned the promise of robotics in the operating room into a more tangible reality. The da Vinci Surgical System is behind the famous viral “grape surgery” video, in which it reattaches the peel of a tiny grape. Designed to perform delicate, minimally invasive movements, the da Vinci System is quickly gaining traction in many hospitals.

In current clinical practice, a surgeon uses the console of the da Vinci System to perform surgical tasks using interactive robotic arms. Artificial intelligence researchers aim to enable this system to perform some tasks without console input. Credit: Gautam Gunjala

The da Vinci System is operated by a surgeon, who sits at a console looking at an image of the surgical field. The surgeon controls four interactive robotic arms, which perform the surgery. Surgeons like it for many reasons, including improved visualization, greater dexterity, and the ability to stay seated throughout the surgery, which reduces fatigue.

Even though the surgeon is not standing over the patient, a team remains in the operating room. Communication systems let surgeons talk to their operating team. And in the rare case that an open procedure becomes necessary, the da Vinci System can be detached from the patient very quickly.

Robotic trainers

The da Vinci System can also be outfitted with two consoles, so two surgeons can operate it simultaneously. A trainee may be seated at one console and an experienced surgeon at the other. If the trainee performs a movement that could cause a surgical issue, the experienced surgeon can take control of the system.

“It’s a great tool for training,” says Mastrangelo. “When training in laparoscopic surgery you essentially give up the reins. There’s a safety factor in having the ability to have more than one console. A lot of [surgical] metrics come out of the da Vinci, too. You learn a lot about how you operate and can work to improve on becoming a better surgeon.”

The system even allows for remote input. A surgeon in another facility, perhaps thousands of miles away, can sit in on surgeries with trainees, observe their techniques, and make recommendations. When today’s trainees are full-fledged doctors, an AI may be the one observing their techniques and making recommendations on how to improve. But before these computer algorithms can evaluate surgeons, they must themselves be trained in surgery. To do so, an AI uses machine learning, the ability of computers to automatically learn and improve through experience.

In supervised machine learning, an AI learns from a “training” dataset. The AI is given “inputs” (data like videos, pictures, and numerical values) along with the “ground truth,” the set of correct predictions the AI should make from those inputs. The process is similar to the way students study with the answer key in the back of a textbook. The authors wrote the key, so its answers can be trusted. Students work through the problems on their own, then check their responses against the key. If their answers consistently match, they know they are solving the problems correctly. If not, they rethink their approach and try again until the answers agree.
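For readers who want to see the answer-key loop in code, here is a minimal Python sketch of one of the simplest supervised learners, a perceptron. It is not the method used by any of the researchers in this article; the two input features (a rescaled task time and tool path length) and the “novice” and “expert” labels are invented toy values standing in for real surgical data. Each time a prediction disagrees with the ground-truth label, the model nudges its weights toward the correct answer, much as a student revises an approach after checking the key.

```python
# A toy perceptron, one of the simplest supervised learners.
# The data below are invented for illustration; they are not real surgical measurements.

def predict(weights, bias, x):
    """Return 1 ("novice-like") if the weighted sum is positive, else 0 ("expert-like")."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0

def train(inputs, labels, epochs=20, lr=0.1):
    """Fit weights by checking each prediction against its ground-truth label."""
    weights = [0.0] * len(inputs[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(inputs, labels):
            error = y - predict(weights, bias, x)  # 0 when the answer matches the key
            if error != 0:
                mistakes += 1
                # Shift the weights toward the correct answer and try again.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every answer matched the key on this pass
            break
    return weights, bias

# Inputs: (task time, tool path length), both rescaled to 0-1 (hypothetical).
# Ground truth: 1 = novice, 0 = expert (hypothetical).
inputs = [(0.2, 0.3), (0.3, 0.2), (0.25, 0.35), (0.8, 0.9), (0.9, 0.7), (0.75, 0.85)]
labels = [0, 0, 0, 1, 1, 1]

weights, bias = train(inputs, labels)
print([predict(weights, bias, x) for x in inputs])
```

Training stops as soon as a full pass produces no mistakes, the code equivalent of every answer matching the key.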

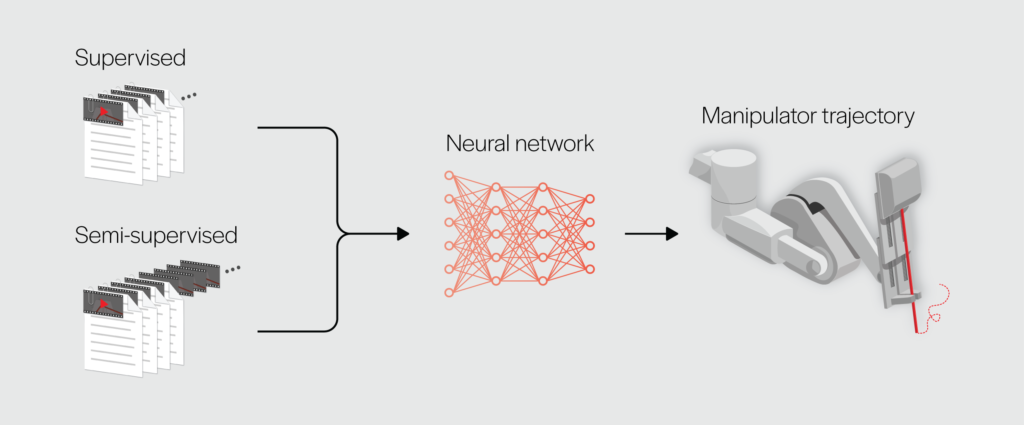

In supervised learning, data (video frames) and annotations (kinematic measurements, gesture labels) are used to train a neural network to plan a manipulator trajectory for a task. In semi-supervised learning, not all video frames are annotated. Credit: Gautam Gunjala

Translational researchers, who bring bench science to bedside medicine, have found that the da Vinci System can generate training data that can be used to improve both AI and medical outcomes. Researchers at Johns Hopkins University’s Intuitive Surgical Link used the surgical robot to create the Gesture and Skill Assessment Working Set, known as JIGSAWS, initially as a training dataset for AIs that evaluate surgeons’ suturing performance. Eight surgeons of different skill levels used the da Vinci System to complete three tasks considered core surgical skills: suturing, knot tying, and needle passing.

The dataset includes videos of the robot’s arms in action and kinematic data describing the arms’ motions. In addition, each video has corresponding manual annotations, produced with the help of experienced surgeons, that break the tasks down into discrete actions and rate the surgeon’s performance. AIs that use JIGSAWS as the team intended treat the videos and kinematic data as inputs and the manual annotations as the ground truth. These AIs learn to break new surgical videos down into distinct surgical actions so that they can evaluate and rate the surgeon’s skill level.
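To make the split between inputs and ground truth concrete, the Python sketch below pairs per-frame kinematic measurements with labels expanded from activity annotations, then scores a predicted labeling frame by frame, one common way to measure how well an AI has broken a video into actions. The `Segment` structure, the three-number tool-tip positions, and the gesture names (borrowed from the needle actions mentioned later in this article) are hypothetical illustrations; they do not reproduce JIGSAWS’s actual file format.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    """A hypothetical activity annotation: frames start..end (inclusive) share one label."""
    start: int
    end: int
    label: str

def frame_labels(segments, num_frames):
    """Expand segment annotations into one ground-truth label per frame (None if unannotated)."""
    labels = [None] * num_frames
    for seg in segments:
        for f in range(max(seg.start, 0), min(seg.end, num_frames - 1) + 1):
            labels[f] = seg.label
    return labels

def training_pairs(kinematics, segments):
    """Pair each frame's kinematic vector (input) with its label (ground truth)."""
    labels = frame_labels(segments, len(kinematics))
    return [(k, y) for k, y in zip(kinematics, labels) if y is not None]

def frame_accuracy(predicted, truth):
    """Fraction of annotated frames whose predicted label matches the ground truth."""
    scored = [(p, t) for p, t in zip(predicted, truth) if t is not None]
    if not scored:
        return 0.0
    return sum(p == t for p, t in scored) / len(scored)

# Toy example: six frames of made-up (x, y, z) tool-tip positions.
kinematics = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.1, 0.0),
              (0.2, 0.2, 0.1), (0.1, 0.2, 0.1), (0.0, 0.2, 0.1)]
segments = [Segment(0, 2, "needle insertion"), Segment(3, 5, "needle extraction")]

pairs = training_pairs(kinematics, segments)
truth = frame_labels(segments, len(kinematics))
guess = ["needle insertion"] * 4 + ["needle extraction"] * 2  # a hypothetical model's output
print(frame_accuracy(guess, truth))
```

Here the hypothetical model mislabels one of six frames, so it scores five out of six.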

Artificially intelligent surgeons

Drs. Ajay Tanwani and Ken Goldberg, researchers in the Industrial Engineering and Operations Research Department at UC Berkeley, found a different use for JIGSAWS. Their team, along with collaborators from Google and Intel, developed an algorithm called Motion2Vec that learns how to operate the da Vinci System by watching human surgeons. The dataset’s focus on suturing was fortuitous. Because suturing is one of the most foundational tasks in surgery, offloading it to a surgical AI would free surgeons to focus on more technical and unpredictable tasks. “Suturing is something that is very tedious,” explains Goldberg. “It’s delicate work, but we’re trying to push that envelope slowly forward.”

The problem is that JIGSAWS provides a limited amount of data, and a surgical AI improves with exposure to many surgeries. Variety matters too: at any moment, the AI may need to choose among surgical actions such as needle insertion, needle handoff, or needle extraction. A good medical AI would overcome these issues by being trained on examples from every scenario it might encounter.

This makes the amount of data required to train a medical AI enormous. Data must be supplied not only in a form the algorithm can interpret but also in large enough quantities. That sort of information cannot come from a few minutes of video or a handful of pictures; hundreds of hours of footage must be spliced together. The best results would come if all of those data were annotated, but annotation takes time.

The JIGSAWS dataset contains videos captured by the da Vinci System during surgical processes, supplemented by two types of annotations. Kinematic annotations describe the positions and velocities of the surgeon-side and patient-side manipulators at each video frame. Surgical activity annotations break down a complex procedure into sub-tasks, each associated with a sequence of frames. Credit: Gautam Gunjala

Dr. Danyal Fer, a resident in the University of California San Francisco East Bay Surgery Program, emphasizes, “Surgeons who are working eighty hours a week find it hard to sit down and accurately annotate what is occurring in a surgical scene [to assist with supervised learning]. Any method you can create to generate a dataset more quickly and accurately is going to be helpful in this space.”

To develop Motion2Vec with limited help from surgeons, the UC Berkeley team trained their algorithm on JIGSAWS using “semi-supervised learning.” They fed Motion2Vec a small number of videos labeled with the information the robot needed to imitate the actions in them. To supplement the annotated videos, they also fed the algorithm unlabeled video data. It was up to Motion2Vec to group each unlabeled video with similar labeled ones so that it could amass enough examples to closely imitate the recorded surgical actions. If the algorithm grouped videos incorrectly, the movements it produced would fail to match the ground truth, forcing it to reevaluate and adjust its groupings.
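The grouping idea can be illustrated with a far simpler technique than Motion2Vec’s. Motion2Vec learns its groupings with a neural network; the Python sketch below instead swaps in a seeded-clustering style of pseudo-labeling, and every feature value and label in it is a hypothetical toy stand-in for a video clip. Each unlabeled point is assigned the label whose group center is closest, the centers are recomputed with those pseudo-labels included, and the process repeats until the assignments stop changing. It captures only the grouping step, not the imitation of surgical motion.

```python
import math

def centroid(points):
    """Mean of a list of equal-length feature vectors."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

def nearest(point, centers):
    """Return the label whose center is closest to point (Euclidean distance)."""
    return min(centers, key=lambda label: math.dist(point, centers[label]))

def pseudo_label(labeled, unlabeled, max_rounds=10):
    """Group unlabeled points with the closest labeled group, repeating until stable.

    labeled: list of (features, label) pairs; unlabeled: list of feature tuples.
    """
    assignments = [None] * len(unlabeled)
    for _ in range(max_rounds):
        # Collect each label's members: annotated examples plus current pseudo-labels.
        groups = {}
        for x, y in labeled:
            groups.setdefault(y, []).append(x)
        for x, y in zip(unlabeled, assignments):
            if y is not None:
                groups[y].append(x)
        centers = {label: centroid(pts) for label, pts in groups.items()}
        updated = [nearest(x, centers) for x in unlabeled]
        if updated == assignments:  # groupings stopped changing
            break
        assignments = updated
    return assignments

# Hypothetical 2-D summaries of video clips: two annotated, four unannotated.
labeled = [((0.0, 0.0), "needle insertion"), ((1.0, 1.0), "needle extraction")]
unlabeled = [(0.1, 0.2), (0.9, 0.8), (0.2, 0.1), (0.8, 1.0)]
print(pseudo_label(labeled, unlabeled))
# → ['needle insertion', 'needle extraction', 'needle insertion', 'needle extraction']
```

Because the labeled examples anchor each group, a handful of annotations can be stretched across many unlabeled examples, which is the appeal of semi-supervised learning when surgeons have little time to annotate.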

Motion2Vec will not be deployed in da Vinci Systems in the operating room anytime soon, but the research group has reported some promising results. Their AI mimicked the movements of skilled surgeons with 85.5 percent accuracy, suggesting that the robot can reproduce a surgeon’s motion relatively closely.

Yet, there are still limitations in training an algorithm on JIGSAWS. “These annotations take place in a controlled environment,” explains Fer. “In the future, you must be able to accurately describe what the surgeon is doing in an operation with far more detail, in a much more complex, and a much less controlled environment.”

Learning from less controlled datasets is not an easy task for an AI. Recognizing a surgeon’s skilled hand movements and imitating them requires a thorough understanding not just of the motions themselves, but also of differences in lighting, background, and scene geometry. That makes it important to characterize factors such as the position and size of surrounding objects and the viewpoint of the camera. Still, if researchers can pull it off, the possibilities are vast. “The world around us is filled with information in the form of videos, images, and text,” says Tanwani. “If [AIs] are to make sense of this information—similar to how humans perceive and act in their environments—[they] can be of tremendous value in everyday life.”

Ethics of artificial intelligence in biomedicine

Although artificial intelligence is steadily becoming more common in medicine, it will not be taking your doctor’s job any time soon. A precise algorithm does not replace a seasoned physician. Each AI is built for a highly specialized task and covers only a small fraction of patient cases and conditions. Doctors and surgeons, by contrast, can weigh hundreds of factors at once, drawing conclusions from a patient’s medical and family history, not just physical symptoms. No AI in the foreseeable future will equal a doctor.

“The first real tangible milestone of [AI]-assisted surgery would be to have robots that are semi-operated,” Tanwani explains. Surgeons would be in complete control of the robot, but the AI would show them a way to correct erroneous movements. “If we have the right quality of data available,” says Tanwani, “we can incorporate [these AIs] as standard models that can be used for training and assisting novice surgeons, as well as providing feedback to experts on how to control these robots.”

A full rollout of AI in surgery would have serious implications for patients and clinicians alike. Some ethicists believe that an AI must simply do a statistically better job than the average medical professional. Others take the stronger stance that AI must do no harm. “The debate parallels that of self-driving cars,” comments Fer. “There’s a good chance a self-driving car is better than your average driver. But every time a self-driving car has a problem, it is a big deal.”

At a societal level, it’s unclear what errors would be acceptable from a machine as opposed to a human. Patients may frame the question in terms of accountability: who is responsible for a surgical or diagnostic error if things go awry? Does liability fall to the surgeon overseeing the surgery, the physician supervising the diagnosis, the hospital in which the surgery takes place, the doctors who helped create the training dataset, or the company that created the AI?

“The errors you see in an autonomous system are going to be different from the errors you see occur in a human-driven system,” Fer explains. “If I’m suturing a blood vessel, I might not make all the sutures evenly spaced but an autonomous system could completely misread the environment—suturing a centimeter off in a completely different part of the blood vessel.”

“We do not anticipate replacing surgeons altogether,” concurs Goldberg. “I don’t think robots are capable of replacing people. But they can make people’s jobs better—just like driver-assist makes drivers better drivers, surgeon-assist would make surgeons better surgeons.”

AI and surgical robots may one day factor heavily into the surgical process. There is perhaps even a chance these technologies will feature in a storyline on your favorite medical drama. From correcting erroneous movements to preventing tragic accidents, technology holds immense promise in the operating theater. Scientists and clinicians are working hand in hand to create a surgical field that is safer for patients and less risky for doctors. Modern tools, including phantoms, AI, and robotic technology, are at surgeons’ disposal, giving them confidence before making the cut.

This article is part of the Fall 2020 issue.