In 1960, mathematician Norbert Wiener wrote an article in Science titled “Some Moral and Technical Consequences of Automation.” It was a seminal work that began asking ethical and philosophical questions about artificial intelligence (AI), the field of scientific inquiry that seeks to build machines that can think and reason about the world. Wiener outlined the concerns and challenges that engineers and researchers would face as machines began to exhibit properties more commonly associated with humans. These included the ability to play games like tic-tac-toe, chess, and checkers, and also the ability to learn from experience. As Wiener wrote, a game-playing machine’s decisions would “take into consideration not merely the moves as they occurred in the individual game but the record of games previously played.”

At the time, the article and Wiener’s related work fueled the study of cybernetics, a research endeavor that brings control theory, computation, logic, and biology together into a cohesive theory of intelligent systems. Although intelligence itself was an ill-defined construct, the field began with a hope that what we describe as human cognition could be encoded in a machine.

Cybernetics took root at UC Berkeley. Professor Lotfi Zadeh, who taught computer science at UC Berkeley, introduced ideas such as fuzzy logic, which draws on formal logic and probability theory to create “fuzzy” interpretations of the world. An example of this logic is an object’s state of being hot or cold; Zadeh argued that this is not a binary property but one that occurs along a gradient. Such “fuzzy” descriptions of the world allowed for more nuanced interpretations. Meanwhile, Professor Alfred Tarski, who taught mathematics at UC Berkeley, studied the foundations of modern logic, the study of truth, and the axioms that make up mathematics. Wiener’s proposals regarding artificial intelligence required contributions from multiple disciplines because building machines capable of these new logical operations could not only signal a significant shift in human capabilities, but could also change the relationship that we have with technology. Rather than relying on technology that operated on a set of pre-programmed rules, the end goal was to develop systems that could operate on a flexible kind of logic that could adapt and learn.
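
Zadeh’s hot-or-cold example can be made concrete with a small sketch. The temperature cutoffs and the function name `hot_membership` are assumptions chosen for illustration; the `min`, `max`, and `1 - a` operators are the standard ones from fuzzy set theory.

```python
def hot_membership(temp_c, cold_at=10.0, hot_at=35.0):
    """Degree, from 0.0 to 1.0, to which a temperature counts as 'hot'.

    The cutoffs are illustrative assumptions, not measured values.
    """
    if temp_c <= cold_at:
        return 0.0
    if temp_c >= hot_at:
        return 1.0
    # Linear ramp between "definitely cold" and "definitely hot".
    return (temp_c - cold_at) / (hot_at - cold_at)


def fuzzy_not(a):
    """Complement of a degree of truth."""
    return 1.0 - a


def fuzzy_and(a, b):
    """Fuzzy conjunction: the weaker of two degrees of truth."""
    return min(a, b)


def fuzzy_or(a, b):
    """Fuzzy disjunction: the stronger of two degrees of truth."""
    return max(a, b)


# A mild day is partly hot and partly not hot at the same time.
mild = hot_membership(22.5)
print(mild, fuzzy_not(mild))
```

In ordinary binary logic, the mild day would have to be classified as either hot or not hot; here it holds both descriptions to a degree.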

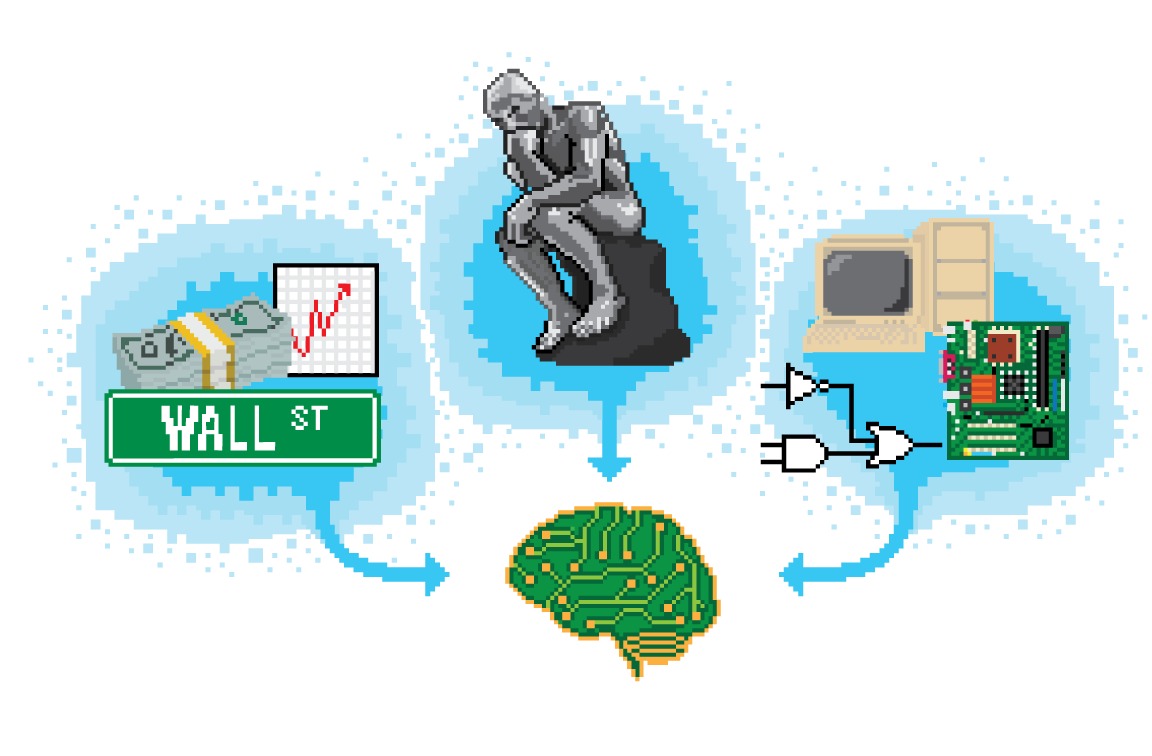

As the decades went on, artificial intelligence researchers identified specific problems to solve in order to achieve logical thought in an artificial system. These problems included classification (categorizing objects into groups), decision-making (choosing between a set of alternatives), and prediction (forecasting the evolution of a system). The study of these problems brought together disparate fields like computing, economics, and philosophy.

Modern artificial intelligence exists at the intersection of many diverse fields, such as economics, philosophy, and computer science.

At UC Berkeley, research centers such as Berkeley AI Research and the Center for Human-Compatible AI, along with professors and students across departments like Electrical Engineering and Computer Sciences, Economics, and Philosophy, have taken up the grand challenge of understanding the structure of intelligence. Their aim is to build real-world systems that exhibit properties like reasoning and cognition while also ensuring that their impact on the world is inherently good.

Economics: a strategic approach to complex systems

As researchers attempt to build computational systems with increasing capacity to impact the world, questions arise about the moral and technological challenges that accompany these processes. Today, these challenges range from designing autonomous vehicles that ensure the safety of both drivers and pedestrians to developing content recommendation services that reach hundreds of millions of people. Is there a way to build systems that are guaranteed to impact society positively? This very question, however, brings further uncertainties with it. To start, there is almost no universally acknowledged description of “beneficial” in complex societal systems. For example, social networks connect large groups of people, but they also pose serious challenges through the rapid spread of misinformation. And, as an extension, even if there were a way to systemically define benefit and loss in a societal context, how could it be guaranteed that the system being designed was beneficial?

Enter economics. The beginning of a complete theory of provably beneficial intelligence has its roots in understanding human preferences. Ideally, any autonomous intelligent agent should understand human priorities and preferences and be able to reason about them. However, human preferences have traditionally been regarded as fundamentally unobservable, as attempts to measure human preferences are limited to self-reported responses that may not adequately consider outside and subconscious influences.

The formal study of human decision making began in the 1940s with the work of John von Neumann and Oskar Morgenstern, who put forth the beginnings of modern microeconomics. They studied the cases under which a decision made by an agent could be said to be “rational.” The modern foundations of economics evolved in parallel with computer science and studied the allocation of scarce resources and decision-making by humans under various scenarios. In particular, the field of game theory (the study of strategy) originated as a response to problems that involved not only a single decision-maker, but also multiple humans strategizing with or against each other. Economic theory extended models of the preferences of a single human being to the study of aggregate populations of people.

A principal-agent problem refers to an interaction between one human and one artificially intelligent system.

Similarly, building intelligent systems can roughly be divided into what are called principal-agent problems and multi-agent problems. Principal-agent problems ask questions associated with a single human being interacting with a single autonomous system. Multi-agent problems broaden this inquiry to either multiple humans or multiple intelligent systems.

In a multi-agent problem, AI interacts with multiple humans or other intelligent systems.

Computer scientists have borrowed ideas from economics to develop models of human decision-making in large-scale societal systems. These include the study of congestion patterns in traffic and autonomous driving, efficiency of markets and networks, and the structure of institutions. These real-world systems are important because autonomous systems with many interacting components have properties that are unintuitive. Scientists can therefore use models from economic theory to describe decision-making in these complex systems.

Choice and morality

One of the key challenges to understanding human decision making is that our preferences do not occur in a vacuum but are instead shaped by external factors. When an autonomous system attempts to learn human preferences, it may end up shifting or distorting them. In the Department of Philosophy at UC Berkeley, Professor Lara Buchak studies the foundations of rational choice and how we respond to suggestions. Economic theory attempts to identify how human beings make decisions, and rational choice is the long-standing theory that underlies decision making. Buchak’s 2013 book Risk and Rationality focuses on the choices that human beings make and suggests that expected utility theory, a predominant theory holding that rational agents select the option with the highest expected reward, is incomplete without a more thorough understanding of risk.

Buchak starts her book with the question: “What is good for some group of people?” Uncertainty arises when we try to balance freedom of choice with improved outcomes in situations with many unknown variables. Buchak then provides an example: “If we are both choosing between apples and oranges, and I choose the apple because I like apples better than oranges, but you choose the apple because you think it would be really impolite of you to choose this delicious orange, we have made the same choice but for different reasons.” It is entirely possible in this scenario that preferences do not correctly map to choices at all.

Researchers are now trying to translate the uncertainty of human preferences to artificial intelligence. Professor Stuart Russell’s group from the Department of Electrical Engineering and Computer Sciences (EECS) is taking on the challenge of human preferences. They’re specifically applying the ambiguity of human preferences to robotic systems. Russell brings up a distinction between what he calls the “standard model” of AI and the modifications he proposes. The standard models of AI perform a small number of computations on a limited number of inputs and have largely hard-coded objectives that are specified by the human that designed the system. However, with the development of the field of deep learning, it is now commonplace to do many computations on each input.

“You can think of a deep learning system as being a very large circuit that represents a complicated function,” Russell says. “It is very hard to connect deep learning systems to a database, and in practice we need them to acquire a huge amount of data to achieve functionality to accomplish a task.” Deep learning systems are typically composed of neural networks, which are essentially a series of algorithms processing a numerical representation of some input, connected with values called “weights” that are adjusted to indicate how inputs should be converted into the desired outputs. In effect, neural networks are general purpose tools that can approximate arbitrarily complicated functions that map inputs to output behavior. They are useful because of the wide range of behaviors they are able to exhibit. However, they also have many drawbacks.

Russell mentions that humans have “a vast library of hierarchical, behavioral structures,” which can be deconstructed into individual physical actions. He notes that humans also build plans at “different levels of abstraction,” but “where those hierarchies come from is an open problem.” Russell’s current main area of research is the difference between the standard model of AI and what we want intelligent systems to do. The problems we see with typical AI models occur because we do not know how to specify objectives correctly. Because the standard AI model can only perform based on pre-programmed behaviors, if an intended objective was not properly included in the original code, the AI will not have the capacity to adjust its outputs to the correct behavior.

This inability to adjust to new situations highlights the problem of objective misspecification in artificial intelligence. Say, for example, that we have an autonomous robot that is built to bring us coffee. The only objective that is encoded in the robot is to deliver coffee to the human being, and the robot proceeds to fulfill this objective to the best of its ability. However, being completely unaware of the state of the rest of the world, the robot decides that the most efficient way of getting the most coffee to the human being is to engage in destructive, criminal behavior and rob the nearest coffee shop. Russell calls this response the “King Midas problem,” harking back to the Greek myth of a king who wished that everything he touched would turn to gold but forgot to make exceptions for things he did not want to be turned into gold.

If one were to order coffee from a robot unaware of human preferences, that robot could cause some damage accomplishing its singular objective.

This example highlights the problem of runaway effects that can occur due to objective misspecification when building autonomous systems. The fact of the matter is, a robotic system does not have context about human morality, normative preferences, or the idea of value. These are completely external entities to the central problem that any modern intelligent system fundamentally solves, that is, maximizing the reward of accomplishing a task while minimizing the cost of performing the task.
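
The coffee robot’s failure can be sketched in a few lines. Everything here is hypothetical: the plans, the numbers, and the `harm_weight` parameter are invented for illustration, and the hand-written penalty is only a way of showing what the misspecified objective leaves out, not a method attributed to any of the researchers quoted in this article.

```python
# Hypothetical plans: (description, cups of coffee delivered, harm caused).
PLANS = [
    ("brew one cup in the kitchen", 1, 0.0),
    ("buy two cups at the cafe", 2, 0.0),
    ("rob the coffee shop", 50, 100.0),
]


def misspecified_reward(cups, harm):
    """The only encoded objective: deliver as much coffee as possible."""
    return cups


def reward_with_side_effects(cups, harm, harm_weight=10.0):
    """Same task, but harm to the rest of the world now carries a cost."""
    return cups - harm_weight * harm


def best_plan(reward_fn):
    """Return the description of the plan with the highest reward."""
    return max(PLANS, key=lambda plan: reward_fn(plan[1], plan[2]))[0]


print(best_plan(misspecified_reward))
print(best_plan(reward_with_side_effects))
```

The optimizer is identical in both calls. Only the objective changes, which is why a missing term in the objective can be so costly.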

Russell’s group has been working on devising a new theory of intelligent systems that relies on leaving the human preferences uncertain to the robot. It would seem to make more sense to give the robot all necessary information about the human user so that it makes as few mistakes as possible. However, the effect of Russell’s approach is that the robot is forced to learn about instances in which it is misbehaving or otherwise not fulfilling the human intent that it was designed for. Furthermore, by having this ability to learn, it can go on to figure out preferences that may have been otherwise missed in the original coding of the system.
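
One simple way to picture a robot that is uncertain about human preferences is a belief that is updated with Bayes’ rule each time the human makes a choice. The sketch below is a toy illustration under stated assumptions: there are exactly two options, the human picks the preferred one with a fixed probability (`reliability`), and the robot asks instead of acting when it is not confident enough. It is not the algorithm used by Russell’s group.

```python
def update_belief(belief, observed_choice, reliability=0.9):
    """Bayes' rule over two hypotheses about which option the human prefers.

    `reliability` is an assumed probability that the human picks the
    option they actually prefer.
    """
    unnormalized = {}
    for hypothesis, prior in belief.items():
        if hypothesis == observed_choice:
            likelihood = reliability
        else:
            likelihood = 1.0 - reliability
        unnormalized[hypothesis] = prior * likelihood
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


def choose_action(belief, confidence_needed=0.8):
    """Act only when confident; otherwise defer to the human."""
    favorite = max(belief, key=belief.get)
    if belief[favorite] < confidence_needed:
        return "ask the human"
    return "serve " + favorite


# The robot starts out unsure, then watches the human choose tea once.
belief = {"coffee": 0.5, "tea": 0.5}
first_action = choose_action(belief)
belief = update_belief(belief, "tea")
second_action = choose_action(belief)
print(first_action, "->", second_action)
```

Because the robot never treats its current guess as certain, a later observation that contradicts the guess will still shift its belief.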

Rohin Shah, a researcher in Russell’s group, works on the foundations of having robots understand human preferences and execute actions that are aligned with human intentions. Shah works on problems that involve robots inferring human preferences from actions. His research on correctly identifying preferences has helped formalize how robots should make decisions. Shah explains, “Human civilization has come this far because humans have the ability to learn from others and build on their work.” Outside of his research in Russell’s lab, he started the AI Alignment Newsletter “as a sort of low-effort precursor to a textbook: it makes it easier for researchers to sift through all of the work being done to find whatever is most relevant to them.”

Dylan Hadfield-Menell, a researcher in the Berkeley AI Research lab and Center for Human-Compatible AI, also works on aligning the preferences of robots and humans. He introduced an early model in 2016 called “Cooperative Inverse Reinforcement Learning,” which is an attempt to formalize how agents can cooperatively identify what each other wants. In the case of designing AI systems, Hadfield-Menell explains that the term “provably beneficial” comes from “systems that are working with people to identify what their goals are, manage their uncertainty about what those goals are, and take actions that are provably aligned with their interests.” Hadfield-Menell also studies the potential failure modes of current AI systems and cites the example of content recommendation. “Companies optimize over engagement, a measure of how long you spend on the site, [and] use a general purpose optimization algorithm with policies for showing content that you are likely to interact with,” he explains. Although this seems, “at first glance, a very reasonable thing to be doing, there are many negative externalities,” he says. These externalities include the spread of misinformation on social networks, as well as a proliferation of conspiracies and other targeted content.

Verifying the quality of systems

As researchers develop systems to assist in our lives, they must formally verify the benefits of such systems in order for the systems to be adopted by the public. Having intelligent systems that can do their job well only in certain controlled scenarios is unsatisfactory for many critical applications. Consider autonomous vehicles, which are an important class of intelligent systems already commonly employed by the public. Engineers must prove they meet safety requirements and other critical characteristics. Much of the research into intelligent systems at UC Berkeley focuses on proving properties of these systems.

Professor Sanjit Seshia’s lab in the EECS department studies formal methods and proofs to verify autonomous vehicle behavior and ensure safety for users. Seshia defines verified AI as “the goal of achieving high-assurance, and ideally provable AI.” Recent AI systems are able to learn from experience, and researchers need to design intelligent systems with properties that can be mathematically proved as reliably true.

The more data is input to an AI system, the more the system can be “trained” to navigate human preferences.

Seshia proposes that instead of asking, “what kinds of properties should these systems have?” researchers should ask, “what formalisms will allow us to express properties that users may want to write?” In order to create beneficial systems, designers need a way to mathematically and computationally express the intended behaviors of the system—this is known as the “specification problem.”

It is also important to extend the properties from logic to deep learning models; for example, understanding the semantic meaning of a request. Seshia explains, “Suppose there is an AI system that takes as an input a picture of a red car and is instructed to draw a bounding box around the car. If the color of the car changes from red to yellow, the pixels in the image have changed drastically, but the semantic meaning of the object should stay the same.” This type of robustness is very desirable in designing AI systems for a world that is dynamic, changing, and uncertain.

Seshia also notes that we need to be very careful about the software engineering and design process for machine learning and AI algorithms. “One of the core places where the design of machine learning algorithms is different from other systems is that the data set determines so much,” he explains. Historically, the focus in designing systems was the design of the hardware, circuit, or architecture of the system. However, with machine learning and AI systems, there is a two-step procedure which involves training and testing. Training is the process in which data is fed to the system to determine the optimal parameters for the system to use. After the system is trained, the system goes through testing, in which it makes predictions on previously unseen data and is evaluated on its performance. Thus, the models that researchers are developing can only be as good as the information they give them. This process means a system with extensive data on human behavior will outperform a system that lacks such data.
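
The two-step procedure of training and testing can be sketched with a deliberately tiny model: a single cutoff that separates two classes of numbers. The data, the function names, and the choice of model are all assumptions made for illustration.

```python
def train_threshold(examples):
    """Training: pick the cutoff that misclassifies the fewest examples.

    Each example is (value, label); the model predicts label 1 when the
    value is above the cutoff.
    """
    candidates = sorted(value for value, _ in examples)

    def errors(cutoff):
        return sum((value > cutoff) != bool(label) for value, label in examples)

    return min(candidates, key=errors)


def accuracy(cutoff, examples):
    """Testing: fraction of examples the trained cutoff classifies correctly."""
    correct = sum((value > cutoff) == bool(label) for value, label in examples)
    return correct / len(examples)


# Hypothetical data. The training set has no examples between 4 and 6,
# so the model cannot learn where in that gap the true boundary lies.
train = [(1.0, 0), (2.0, 0), (4.0, 0), (6.0, 1), (8.0, 1), (9.0, 1)]
test = [(3.0, 0), (5.0, 0), (7.0, 1)]

cutoff = train_threshold(train)
print("train accuracy:", accuracy(cutoff, train))
print("test accuracy:", accuracy(cutoff, test))
```

The model fits its training data perfectly yet misclassifies a held-out example that falls in the gap, a small instance of a model being only as good as the data it was given.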

Challenges and growth

As intelligent systems become ever more pervasive in society, it is becoming more and more important to treat the safety and reliability of these systems as critical design parameters. For example, having safety assurances for autonomous vehicles is very important in being able to properly account for accidents. Dr. Jaime Fisac, who worked on this topic during his PhD at UC Berkeley in the EECS department, says, “Many of the data-driven, learning-based techniques that we use for control and decision making do not have guarantees.” Fisac also notes that “some of the formulations from learning we are bringing into decision-based systems come from a field in which safety-critical systems are not a priority.”

As AI becomes more ingrained in our world, it will become increasingly important to standardize tests to ensure the safety of AI systems and their benefit to society.

Typically, reinforcement learning systems focus on optimizing for some average case outcome, but Fisac is interested in constraints on the system beyond this type of naive optimization. These could include safety constraints in vehicles or parts of the physical world that a robot should not be able to access in order to remain safe. Fisac has also studied the ways in which autonomous systems interact with human beings, especially in a “game theoretic” setting, in which humans respond strategically to a system’s actions. One challenge is to reason through the mutual strategic influence between the intelligent system and the human user. “In practice, people have expectations about the autonomous system and expectations about each other,” Fisac notes about the interaction.
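
The difference between optimizing an average-case outcome and respecting a safety constraint can be shown with a toy example. The actions, rewards, risk numbers, and the `max_risk` limit below are all hypothetical, and the sketch is not a description of Fisac’s methods; it only illustrates the idea of filtering out unsafe options before optimizing.

```python
# Hypothetical driving actions with an assumed average reward and an
# assumed probability of collision for each.
ACTIONS = {
    "swerve through crosswalk": {"avg_reward": 9.0, "collision_risk": 0.20},
    "maintain speed": {"avg_reward": 7.0, "collision_risk": 0.05},
    "slow down": {"avg_reward": 5.0, "collision_risk": 0.001},
}


def best_on_average(actions):
    """Naive optimization: highest average reward, ignoring risk."""
    return max(actions, key=lambda name: actions[name]["avg_reward"])


def best_safe(actions, max_risk=0.01):
    """Constrained optimization: discard unsafe actions, then optimize."""
    safe = {
        name: stats
        for name, stats in actions.items()
        if stats["collision_risk"] <= max_risk
    }
    if not safe:
        return None  # No action satisfies the constraint.
    return max(safe, key=lambda name: safe[name]["avg_reward"])


print(best_on_average(ACTIONS))
print(best_safe(ACTIONS))
```

Treating safety as a hard constraint rather than as one more term in the reward means that no amount of average-case benefit can buy back an unsafe action.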

Humans tend to underspecify their objectives and thus, when building systems of any complexity, we have a responsibility to show formal verification and correctness guarantees of the system. Already in daily life, we are seeing the impacts of systems that do not fit these specifications. Social networking websites like Facebook and Twitter have drawn plenty of criticism for their recommendation algorithms that optimize for engagement but at the same time seem to take away the autonomy of the user. And in the development of autonomous vehicles, there were reported cases of human fatalities when vehicles misclassified situations and did not stop for pedestrians when they should have.

Norbert Wiener’s vision of a feedback loop that generates intelligence has come a long way since the 1960s. The field has gone through many iterations and is poised to make an impact on the ways that we make decisions and understand preferences. This pursuit of studying human decision-making has led to the field internalizing and exploring ideas from many disciplines, including logic, economics, and of course, computer science. As AI systems make their way slowly from laboratories into the real world, engineers and researchers are striving to build robust, formally correct machines and algorithms. The introduction of modern learning technologies into everyday applications will require a more reliable theory of intelligence that is in line with human interests.

Kshitij Kulkarni is a graduate student in electrical engineering and computer sciences.

Design: Santiago Yori

This article is part of the Spring 2020 issue.