You rest immobilized in a dreamless sleep, untouched by time, until piercing sirens wake you. The world comes into sharp focus and you see uniformed men and women moving through the room. Many are running and shouting, some have tears in their eyes, and others are on the verge of hysterics. You analyze this human behavior, an unmistakable sign of catastrophe. Finally, someone addresses you and discloses that a powerful earthquake has rocked the California coast and countless human lives are in need of rescue. Many are trapped beneath the rubble of buildings and bridges, others wedged between the wreckage of cars and city streets. This is where you are needed, and this is where the groundwork being laid by UC Berkeley researchers will transform our world.

At the remains of once iconic cities you begin to work. You communicate with others of your kind, flying overhead or moving through the small spaces between the rubble to detect traces of life. Effortlessly, you move hundreds of pounds of debris, cooperating with uniformed men and women to free survivors from the ruins. Even in the face of devastation there are no traces of fear, anxiety, or doubt running through your mind. Unlike your human colleagues, you were not shaped by millions of years of evolution. You are a robot endowed with artificial intelligence (AI) and engineered with one ultimate purpose: to help humanity.

The future of AI robotics will push the limits of human imagination. The idea of creating artificial people with human-like intelligence and capabilities has been interwoven in mythology, art, philosophy, and science fiction for many centuries. Greek mythology illustrated a world of living gods, humans, and automatons—animated statues built by Hephaestus to serve and protect their human possessors. In the 13th century, the prolific Islamic engineer Ismail Al-Jazari designed a wide range of human-like automata driven by hydraulic energy, which could serve drinks, help in hand washing, and play music. The 20th century brought us science fiction by Isaac Asimov, who famously penned the laws of robotics that controlled his robotic characters and secured the safety of civilization.

The technology behind robotic AI capable of integrating itself into human society is still in its infancy. However, the potential of developing robust AI systems in the future has united groups of scientists around the world with the goal of making this technology beneficial for humanity. An interdepartmental group of scientists at UC Berkeley is part of this collaborative effort to engineer AI, including physically adept robots, that ultimately understands human values. The crux of these AI systems will be the ability to learn about the world and the people that populate it, allowing them to adapt and interact with us. These scientists are not only leading the field of AI—they are influencing the future of human society, which will likely include intelligent non-human counterparts.

A brief history of AI

AI is a marriage of concepts from philosophy, mathematics, neuroscience, and psychology, some of which can be traced all the way back to antiquity. Philosophers like Aristotle developed rules for formal logic, such as deductive reasoning, as a way to translate human reasoning into something structured and mechanical. But many of the momentous ideas that shape AI today originated in the early 20th century. Principia Mathematica was a groundbreaking series on mathematics and symbolic logic, and would go on to influence computer languages. During the 1940s, our understanding of biology influenced many fundamental concepts central to AI. A mathematical model of nervous system activity, published in 1943, contributed to the development of the artificial neural networks that power modern AI. Game theory, a mathematical model of cooperation and conflict, and cybernetics, the science of control and communication that is relevant for both animals and machines, were also developed.

In 1956, AI became an official subject of research after a group of scientists proposed an effort to understand intelligence so thoroughly that it could be replicated in a machine. This was followed by the early “golden years” of AI, when digital computers that could manipulate numbers and symbols, an allegory for human thought, were developed. Computer programs that could solve mathematical equations, play checkers, and communicate in English were generated during this time. The golden years were defined by an optimism that overflowed with the promises of an Artificial General Intelligence (AGI) that could rival the intelligence of an average human within decades.

The golden years lasted until the mid-1970s. Then came the AI winter, a period when researchers began to grasp the true difficulty of the problem they wished to solve. With limited computing power, limited manpower, and limited funding, the field of AI began to stall. The ebb and flow of progress in the field continued until the beginning of the 21st century, when the combination of processing power and machine learning paradigms propelled AI into a new era. We now see AI being integrated into our daily lives. AI programs are driving the behavior of non-player characters in video games, powering voice-activated digital assistants in smartphones, and running apps in our homes that control thermostats and lighting. Advanced AI systems, like self-driving cars, are on the cusp of being released into the world. Yet one of the most exciting applications, autonomous AI robotics, is also one of the most complex to develop. At the forefront of these efforts, UC Berkeley scientists are breaking new ground on emergent AI technologies.

Introducing BRETT, household helper of the future

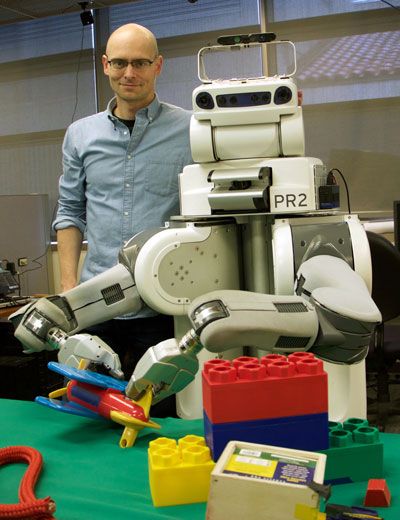

On the seventh floor of Sutardja Dai Hall, one of the most important tools in the Berkeley Artificial Intelligence Lab sits in front of a table cluttered with toys. BRETT (Berkeley Robot for the Elimination of Tedious Tasks) is roughly the same size as its human colleagues, but is still learning the basic motor skills we mastered as children. Its teachers include Pieter Abbeel, Professor in the department of Electrical Engineering and Computer Science (EECS), and his lab, who specialize in developing machine learning algorithms for robotic visual-motor tasks. Non-robotic AI systems have already come a long way since the early golden years, and high-performance speech and image recognition algorithms are all around us. But “in robotics, it’s a little trickier because of the physical interaction with the world,” says Abbeel. In order for AI robots to navigate our world, they need to be able to perceive and manipulate things in their continually changing environment.

With the help of two wrench-like hands and a deep neural network running the algorithms developed in Abbeel’s lab, BRETT has learned to tie knots in a rope, twist caps onto bottles, and fold laundry. Neural networks, like the one BRETT is equipped with, are computational models loosely inspired by biological nervous systems and are made up of processing components, or “neurons” that are connected within a computer network. The network receives an input and each neuron receives a certain stimulation, which it then passes on to the next neuron, until an output is produced. Deep neural networks have many layers of processing components between the input and output neurons, which can generate complex outputs that achieve a wide range of tasks, including motor control.
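To make the idea of layered "neurons" concrete, here is a minimal sketch of a small deep network passing an input through to an output. It is an illustration only, not the network that runs on BRETT: the layer sizes, the random weights, and the function names `make_layer` and `forward` are all assumptions chosen for this example.

```python
import math
import random


def make_layer(n_inputs, n_neurons, rng):
    """Create one layer: a list of input weights and a bias for each neuron."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases


def forward(layers, inputs):
    """Pass the input through every layer in order and return the output."""
    activations = list(inputs)
    for weights, biases in layers:
        # Each neuron sums its weighted inputs, then squashes the result
        # into the range (-1, 1) before passing it to the next layer.
        activations = [
            math.tanh(sum(w * a for w, a in zip(neuron_w, activations)) + b)
            for neuron_w, b in zip(weights, biases)
        ]
    return activations


rng = random.Random(0)
# Hypothetical sizes: 4 inputs, two hidden layers of 8 neurons, 2 outputs.
sizes = [4, 8, 8, 2]
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
output = forward(layers, [0.5, -0.2, 0.1, 0.9])
```

In a robot, the inputs might be sensor readings and the outputs motor commands. With random weights the output is meaningless; learning is the process of adjusting those weights.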

BRETT and Dr. Abbeel.

One type of machine learning that Abbeel’s lab endows BRETT with is imitation learning. This works by showing the machine how to perform a task at each step, like tying a knot in a rope. BRETT can watch someone do this and imitate the movements, or someone can physically move BRETT’s arms and hands until it figures out how to perform the task. This type of learning is effective but time-consuming. An attractive alternative is reinforcement learning. Here BRETT is given a task with no instructions on how to actually accomplish it, but BRETT is given a score after each attempt. Think of playing a new video game: the game never tells you how to defeat the bad guy, but your score lets you know how well you’re doing. When you get a low score you try a different combination of buttons, but a high score reinforces the techniques you are already using. So the closer BRETT is to achieving its task, for example, putting rings on a wooden peg, the better its score is. At first most of BRETT’s movements are random, but as its movements get it closer to its objective, it receives a better score. When BRETT receives a better score, the connections in its neural network change. “Over time, the robot will drift toward finding solutions to the problems that it’s supposed to solve,” says Abbeel. This type of trial and error machine learning is ideal for robots that will go out into the world and encounter different physical spaces and will need to perform a variety of motor movements. Rather than creating robots tailored for very specific situations, Abbeel is creating robots that can adapt to new and different environments.
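The trial-and-error loop described above can be sketched in a few lines. This is a deliberately simplified stand-in (random search that keeps any change that improves the score), not the reinforcement learning algorithms developed in Abbeel's lab. The peg position, the two-number "movement", and the names `score` and `learn` are hypothetical.

```python
import random

PEG = (0.7, -0.3)  # hypothetical target position for the ring


def score(params):
    """Higher is better: negative squared distance from the ring to the peg."""
    return -((params[0] - PEG[0]) ** 2 + (params[1] - PEG[1]) ** 2)


def learn(attempts=2000, step=0.1, seed=0):
    """Try random variations of the movement, keeping only those that score better."""
    rng = random.Random(seed)
    params = [0.0, 0.0]  # the starting movement knows nothing about the peg
    best = score(params)
    for _ in range(attempts):
        candidate = [p + rng.gauss(0.0, step) for p in params]
        s = score(candidate)
        if s > best:  # a better score reinforces the new movement
            params, best = candidate, s
    return params, best


initial_score = score([0.0, 0.0])
final_params, final_score = learn()
```

The learner is never told where the peg is. It only sees the score after each attempt, yet its movements drift toward the target.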

Robots like BRETT have many possible applications, from manufacturing to surgery, but the most motivating application for Abbeel and his lab is robotics for the home. While this is an exciting prospect for those of us who hate household chores, it is surprisingly tough to engineer. The technical challenge is what makes it an attractive goal for Abbeel. “The differentiating factor [between hard and easy robotic engineering] tends to be how much variation there is in the situations that the robot will encounter. A robot folding laundry—that’s very difficult. The pile [of laundry] is always going to be different, and you can’t program a sequence of motions and expect it to succeed. A robot needs to be adaptive to the situation.” Although BRETT has learned to fold laundry in the lab, it is not ready to start cleaning houses any time soon. “We’re at a stage where we’re investigating how we can make reinforcement learning and imitation learning algorithms better,” says Abbeel.

Learning to live with robots

The acquisition of motor skills is only one aspect of AI that is being revolutionized. The robots that populate science fiction seamlessly fit into human society; they learn, make decisions, and interact with the world in a way that is reminiscent of humans. The InterACT lab at UC Berkeley works on generating autonomous AI systems, like self-driving cars and robotic assistants, which can co-exist and cooperate with people. Anca Dragan, a professor in the EECS department, runs the InterACT lab and uses a combination of cognitive science, machine learning, and game theory to explore interactions between AI and people. While much of the work is still in the theoretical stage, Dragan envisions AI robots in our homes, offices, and factories—helping us on our daily commutes, during surgery, and in disaster relief scenarios.

Cooperation between two entities relies on the ability to understand the other’s goals by deciphering their behavior and helping them achieve those goals. To foster cooperation between AI and people, the InterACT lab developed cooperative inverse reinforcement learning (CIRL) in collaboration with Stuart Russell, an EECS professor, and Pieter Abbeel. CIRL allows an AI system to evaluate a person’s actions to understand what they are trying to accomplish and how to help them. “CIRL is an active process. It’s jointly defining the problem and actually accomplishing it,” says Dylan Hadfield-Menell, a fourth-year graduate student in EECS. Cooperation also requires coordination and adapting to another’s actions. For example, the InterACT lab has found that a robot can influence the way a person grasps an object and how efficiently the person will carry out the rest of the task during robot-to-human handovers. Coordination is likewise important when AI and people have different objectives, as is the case with self-driving cars sharing the road with other drivers. Here, the lab has shown that AI can estimate intention by gauging responses to certain actions, like a car creeping forward a few inches to probe whether or not the other driver will let it proceed.

BRETT doing laundry, a difficult task.

Humans also make inferences about others based on their actions, and the InterACT lab studies how robots can effectively communicate information through their movements. “We can't help but interpret and react to an agent's behavior, so robots need to be aware of how their behavior will be interpreted,” says Dragan. Through physical gestures and timing, robots can communicate uncertainty or confidence, goals, successes, failures, and capabilities. The lab even draws inspiration from animation when trying to reproduce expressive motion. While the InterACT lab does not focus on creating human-like robots, researchers do try to integrate natural movements into their robots by having them imitate human behaviors whenever possible. The goal here is to design robotic AI that people will be at ease around.

“To interact with people, robots need to be transparent,” says Dragan, but most people are not accustomed to interacting with a robot. To understand how people respond to and interpret robotic movements, the InterACT lab collects data from human subjects. Surprisingly, these experiments tend to be challenging. “Physics is predictable, but people are unpredictable,” says Hadfield-Menell. The results of these types of experiments also depend on a person’s background and experiences. Despite the difficulty in discerning patterns in human experimental data, the InterACT lab is successfully exploring new frontiers in robot-human interaction.

The art of control

Once you have a capable AI system that can plan and carry out actions, the real challenge becomes control. AI research has focused on creating intelligent machines capable of accomplishing their objectives as well as, or better than, humans. However, the resulting behavior may be unpredictable or even detrimental for society. To safeguard our future, organizations like the Open Philanthropy Project and the Future of Life Institute have come together to fund the Center for Human-Compatible AI (CHCAI). CHCAI, which launched in the fall of 2016, is a collaborative effort led by Stuart Russell. It will fund the work of UC Berkeley researchers Pieter Abbeel, Anca Dragan, and Tom Griffiths, Professor of Psychology and Cognitive Science, as well as researchers from Cornell University and the University of Michigan. The overarching goal of the center is to design AI systems that are beneficial to and intrinsically compatible with human society. These systems need to be able to interact with humanity in a way that is safe and makes sense to us. “Because the meaning of beneficial depends on properties of humans, this task inevitably includes elements from the social sciences in addition to AI,” says Dragan. Collaborators with expertise in other fields like economics and philosophy will likely be recruited to provide a broader perspective (see “Automating Us,” BSR Fall 2015).

Andrea Bajcsy, a graduate student in Anca Dragan's laboratory, is correcting a robotic arm as it hands her a ceramic mug.

Developing beneficial AI systems may seem straightforward, but AI programs do not have the type of experience in human society that we accumulate throughout our lives. In a thought experiment devised by a philosopher at Oxford University, an advanced AI system is given the task of manufacturing paperclips. The AI system will carry out its task more efficiently than humans, and it will use any and all matter it can obtain to achieve its one objective: to produce paperclips. Eventually, a highly efficient AI system will turn all matter in the solar system into paperclips. Without any understanding of what humans value or want, the AI system will persistently try to achieve its objective without any regard for the things we find precious, like human life. “One of the goals is to understand how to communicate what you want and what you’re interested in, and how you avoid accidentally defining something that in the end is detrimental,” says Hadfield-Menell.

CHCAI will focus on designing AI systems, including robots, whose values are aligned with those of humans. How does one design an AI system that really understands what human beings care about? Instead of trying to program individual human values into AI, AI will be designed to learn these values from us. Inverse reinforcement learning (IRL) is an approach that will allow AI to learn about society by observing humans in the real world, watching videos about us, and even by reading about us. “We would like to build systems that have a deep understanding of what people care about, so they can meaningfully improve our lives. Maybe some of that understanding can be built up by just watching our lives,” says Abbeel. Using IRL, AI can observe human action and begin to understand human behavior, resulting in value alignment between AI and people. A basic understanding of human society will also help AI systems determine the correct action to take when following orders, and be flexible when it comes to the inconsistencies and contradictions inherent to human nature.
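The core idea of learning values from observation can be shown with a toy example. The sketch below is an assumption-laden simplification, not the IRL methods used at CHCAI. It supposes a person chooses among three drinks, that options with higher value to them are chosen more often (a softmax choice model), and that the machine weighs three hypothetical guesses about what the person values. All names and numbers are made up for illustration.

```python
import math

OPTIONS = ["coffee", "tea", "water"]

# Hypothetical candidate value systems the machine considers for the person.
CANDIDATES = {
    "likes_coffee": {"coffee": 2.0, "tea": 0.5, "water": 0.0},
    "likes_tea": {"coffee": 0.5, "tea": 2.0, "water": 0.0},
    "likes_water": {"coffee": 0.0, "tea": 0.0, "water": 2.0},
}


def choice_probability(reward, choice):
    """Chance of a choice if options are picked in proportion to exp(value)."""
    total = sum(math.exp(reward[o]) for o in OPTIONS)
    return math.exp(reward[choice]) / total


def infer_reward(observed_choices):
    """Update belief in each candidate after every observed choice (Bayes' rule)."""
    posterior = {name: 1.0 / len(CANDIDATES) for name in CANDIDATES}
    for choice in observed_choices:
        for name, reward in CANDIDATES.items():
            posterior[name] *= choice_probability(reward, choice)
        norm = sum(posterior.values())
        posterior = {name: p / norm for name, p in posterior.items()}
    return posterior


posterior = infer_reward(["tea", "tea", "coffee", "tea"])
best_guess = max(posterior, key=posterior.get)
```

After watching four choices, the machine's belief shifts toward `likes_tea`, even though the person once picked coffee. Tolerating that kind of inconsistency is part of what makes learning from human behavior workable.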

Intelligently designing AI

By building on the work of our scientific predecessors, these researchers are closer than ever to designing transformative AI systems. Somewhat surprisingly, the principles that define intelligence remain enigmatic. John Searle, Professor of Philosophy at UC Berkeley, uses another thought experiment to demonstrate that receiving “inputs” and generating the correct “outputs” does not equal intelligence. Imagine you are in a room alone with nothing but a book that translates Chinese characters to English. Armed with this book but no personal knowledge of the Chinese language, you receive questions written in Chinese characters through a slot in the door. Using the book, you translate the characters and generate appropriate responses in Chinese, which you then send out of the room. Based on your answers, people outside of the room would believe that you are fluent in Chinese. But the reality is that you simply have an efficient method of generating answers.

Intelligence is an important characteristic of humans as a species. But how do we define intelligence in a machine that is made of electrical components and not biological ones? Alan Turing defined intelligent behavior as the ability to converse in a way that is indistinguishable from a person, what we now call the Turing test. The late Donald Davidson, Professor of Philosophy at UC Berkeley, suggested that human thoughts, and consequently intelligence, cannot be defined by physical laws or rules. Some branches of philosophy emphasize that our intellectual abilities are emergent properties of our animal capacities, which would make endowing machines with human-like intelligence impossible. But if we do get to a point where there are other virtually intelligent, non-biological beings in the world, will humans remain unique?

Throughout our lives, we continuously learn about the world around us and develop strategies for solving practical problems we encounter. One tool humans use is inductive reasoning—the ability to form conclusions from small amounts of data. Simply by observing people or things in the world we can learn languages, make inferences about causal relationships, and understand the motivations behind certain behaviors. Currently, these are all things that we can do better than computers. Dr. Tom Griffiths at UC Berkeley works on creating mathematical models of human cognition, the process by which we acquire knowledge, as an alternative approach to designing AI systems. “If you’re somebody who is interested in AI, you can potentially learn from the only system we have that is really good at solving inductive problems, which is human beings,” says Griffiths.

To generate models of cognition, Griffiths starts with an intelligent activity that humans are good at, like understanding causal relationships or learning a language. Using statistics and machine learning models, Griffiths then translates the underlying computational problem and solution into mathematical terms that can be used to design an algorithm. The mathematical solutions are then compared to data on how people actually solve the problem. The sources of data are either naturally occurring data sets or data generated in his lab and in the labs of his collaborators, like Dr. Alison Gopnik, who studies cognition in children. Griffiths then determines whether his mathematical models accurately reflect what people do in the real world. When a solid model is obtained, it may then be used to bestow AI with a more human-like ability to solve real world problems in collaboration with the CHCAI’s other principal investigators.

AI systems that can efficiently solve computational problems already exist, like those that can beat human players at chess and Go, classic strategy games. The definitive challenge is to create a system that can solve a wide range of problems, something more reminiscent of humans. The ability to learn new concepts and new languages would increase the chances of successfully integrating AI systems into our society. In particular, Griffiths is interested in understanding how we acquire inductive bias, the information from our personal experience that we use to learn and form solutions to certain problems. To understand how our inductive biases change throughout our lives, Griffiths collaborates with developmental psychologists, like Gopnik, who study the way children learn and solve inductive problems. They have found that people solve problems differently at different ages due to experience. As we grow and mature, we develop better strategies for solving problems. Interestingly, this makes younger children better than adults at solving certain problems. Because children do not have as much experience as adults to form inductive biases, they are able to think “outside of the box.” For Griffiths, probing the workings of human cognition will never get old: “My goal is not to build AI systems, my goal is to understand people,” he says.

The quest to understand these principles of intelligence may bring about a new technological era. The idea of an integrated world of animal and machine seems like a chapter out of Asimov’s books, but our rapid developments in technology predict a mechanical renaissance in the foreseeable future. Whether it will change our world for the better depends on the work of tens of thousands of AI scientists—and on the willingness of society to support them. Humans have a history of leveraging their limited brainpower to solve complex problems. This is evident in different points throughout time and space: across generations, cultures, and the globe. We have passed down strategies for making tools, fire, and shelters. Our abilities are the foundation on which human society is built, and our cognitive abilities define us as a species. If BRETT has anything to do with it, we may be able to eliminate tedious tasks in the process. And in trying to pass our finite intelligence on, we may learn a great deal about what it means to be human.

Catalina Casillas is a postdoctoral researcher in molecular and cell biology.

Designs and photos courtesy of DJ Shin, David Gorczyca, Ryan Forster, Josh Ellingson.

This article is part of the Spring 2017 issue.