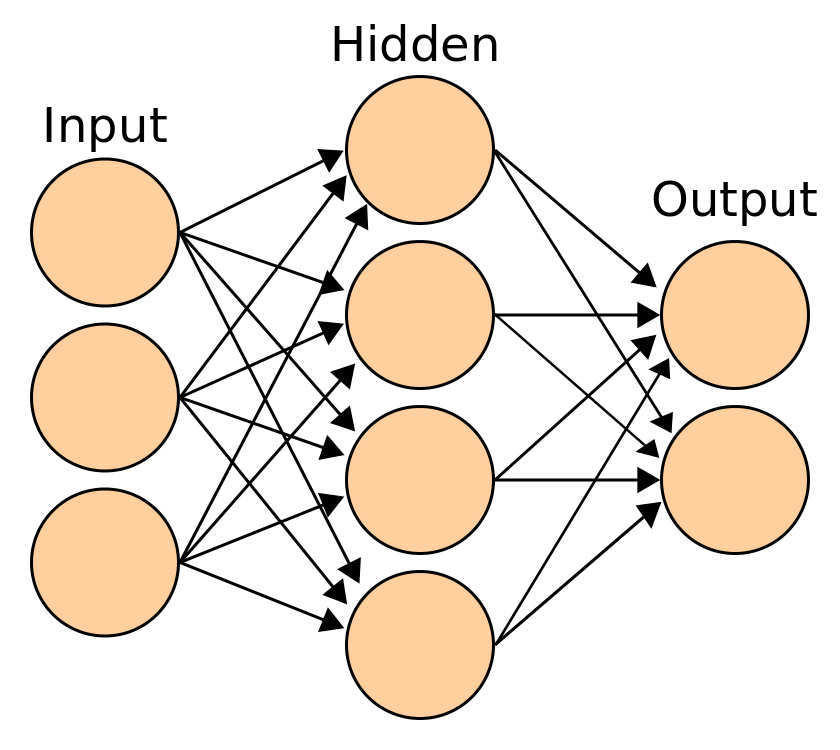

I'm edging into "shill" territory, but the recent rapid progress in neural networks is really worth elaborating upon:

By now, you're probably aware that Google's DeepMind developed a Go algorithm that defeated a professional player in a widely publicized match. You might not know that DeepMind's paper describing the algorithm was published in Nature, and it is definitely worth reading through.

Honestly, most of the recent papers on DeepMind's publication list are fascinating, but I found "Learning to Learn by Gradient Descent by Gradient Descent" and "Unifying Count-Based Exploration and Intrinsic Motivation" to be particularly cool. The first treats the question of "how do I update my neural network's weights?" as a learning problem in its own right, and manages to beat the previous state-of-the-art update tools (e.g., ADA* and other momentum methods). The second is an improvement over their previous algorithm, which learned to play Atari games, and is now able to beat the famously difficult (for AI) game Montezuma's Revenge.
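To make "update tools" a bit more concrete: a hand-designed optimizer is just a fixed rule that turns a gradient into a weight change. Here is a minimal sketch in plain Python on a toy one-dimensional loss. The loss, the function names, and the hyperparameters are all my own illustrative assumptions, not anything taken from the paper.

```python
def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2, minimized at w = 3.
    return 2.0 * (w - 3.0)


def sgd_rule(g, state, lr=0.1):
    # Plain gradient descent: step against the gradient, no memory.
    return -lr * g, state


def momentum_rule(g, state, lr=0.1, beta=0.9):
    # Momentum: keep a running velocity as the optimizer's state.
    velocity = 0.0 if state is None else state
    velocity = beta * velocity - lr * g
    return velocity, velocity


def run_with_rule(update_rule, w, steps=300):
    # The training loop does not care which rule it is given.
    state = None
    for _ in range(steps):
        step, state = update_rule(grad(w), state)
        w += step
    return w


if __name__ == "__main__":
    print(run_with_rule(sgd_rule, 0.0))
    print(run_with_rule(momentum_rule, 0.0))
```

Both rules here are written by hand. As I read the paper, its idea is to swap the hand-written `update_rule` for a small recurrent network whose own parameters are trained by gradient descent, hence the title.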

OpenAI released their "Gym", an open source collection of tools to help you build, train, and compare different types of neural networks on different problems. To emphasize how easy this package is to use, Andrej Karpathy wrote a reinforcement learning agent that learns to play Pong in 132 lines of Python using the Gym API (it's here on GitHub!). Andrej's entire blog post is full of great links, too. (Also, don't miss Andrej's web demos! Seriously, click on them: they train in your browser!)
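If you haven't seen a reinforcement learning loop before, here is a rough sketch of its shape in plain Python. To keep it self-contained it does not import Gym at all: `SignEnv` is a made-up toy environment that only loosely imitates a `reset()` / `step(action)` interface, and the one-weight logistic policy is my own simplification rather than Andrej's network. It also uses each step's immediate reward instead of discounted episode returns, since actions in this toy have no delayed consequences.

```python
import math
import random


class SignEnv:
    """Hypothetical toy environment (not part of Gym).

    Each observation is +1.0 or -1.0. Action 1 is rewarded when the
    observation is positive, action 0 when it is negative.
    """

    def __init__(self, episode_length=10, seed=0):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.t = 0
        self.obs = 1.0

    def reset(self):
        self.t = 0
        self.obs = self.rng.choice([-1.0, 1.0])
        return self.obs

    def step(self, action):
        correct = 1 if self.obs > 0 else 0
        reward = 1.0 if action == correct else -1.0
        self.t += 1
        done = self.t >= self.episode_length
        self.obs = self.rng.choice([-1.0, 1.0])
        return self.obs, reward, done, {}


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train(episodes=200, lr=0.1, seed=0):
    env = SignEnv(seed=seed)
    rng = random.Random(seed + 1)
    w, b = 0.0, 0.0  # policy: P(action = 1 | obs) = sigmoid(w * obs + b)
    for _ in range(episodes):
        obs = env.reset()
        done = False
        while not done:
            p = sigmoid(w * obs + b)
            action = 1 if rng.random() < p else 0  # sample from the policy
            next_obs, reward, done, _ = env.step(action)
            # Policy gradient step: reward times the gradient of
            # log pi(action | obs), which is (action - p) per unit of logit.
            w += lr * reward * (action - p) * obs
            b += lr * reward * (action - p)
            obs = next_obs
    return w, b


if __name__ == "__main__":
    w, b = train()
    print("P(action 1 | obs = +1):", sigmoid(w + b))
    print("P(action 1 | obs = -1):", sigmoid(-w + b))
```

The agent never gets told the rule. It only sees rewards, and the weight `w` drifts toward whatever makes rewarded actions more likely, which is the same basic idea scaled down from Pong to a coin flip.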

OpenAI have provided a nice list of open problems in AI research. The first is more of a "getting started" research project, and the rest are helpfully sorted by how difficult they expect them to be.

The machine learning subreddit is another great resource for getting up to speed with the latest and greatest neural network algorithms. With the number of open source neural network implementations growing, it's easier than ever to start learning the field.