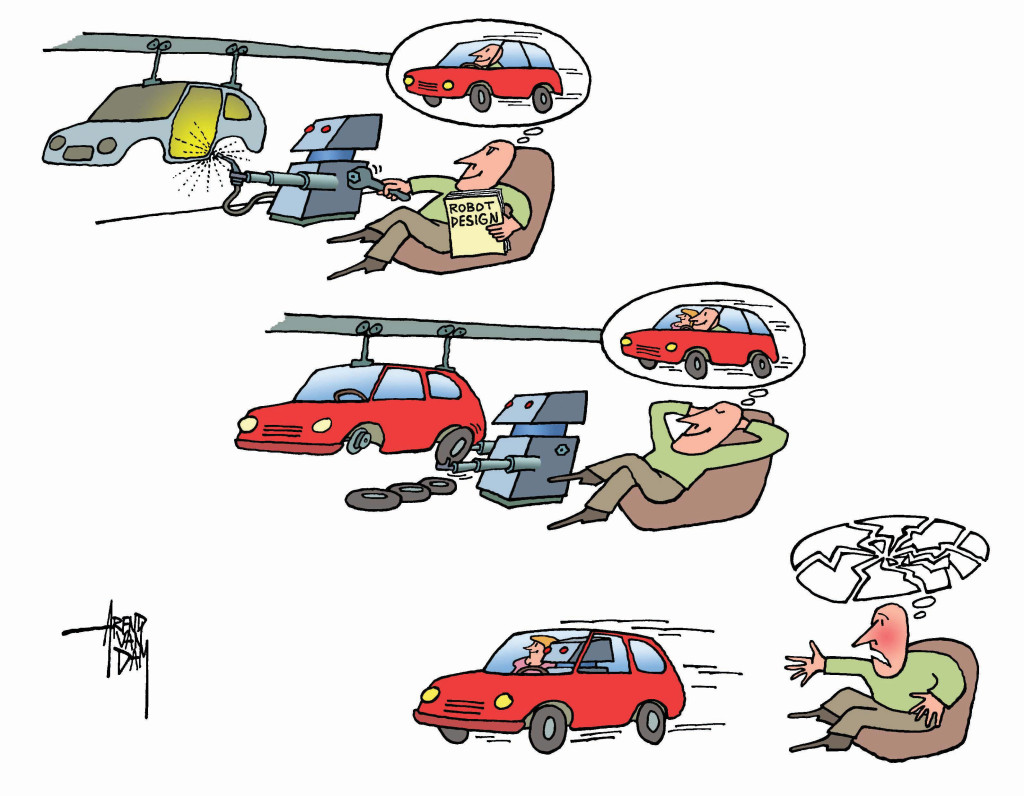

You are heading home to Berkeley after a long day of work in San Francisco, lying back comfortably with your iPad in hand as your self-driving car speeds autonomously over the Bay Bridge. Unexpectedly, the fog closes in and your car issues a warning: “Low visibility conditions. Please prepare to resume control of the vehicle in 60 seconds.” Just as you raise your eyes from the screen, you see a truck cutting abruptly into your lane. With a rush of adrenaline, you reach for the steering wheel and immediately override the autopilot. Your car quickly swerves into the next lane, crashing into a car you had not seen. Who is responsible? The car company recorded your commands to the car and points a blaming finger at you. You disagree, because you trusted that the autonomous car would never allow you to switch lanes unsafely. Yet you chose to override the car in a moment of perceived danger, even though the car supposedly was in control.

Technologies like touchscreens, smartphones and the Internet have already transformed the way we interact with the world around us. However, many of these changes will soon be overshadowed by a dramatic reshaping of the way in which we make decisions and carry out tasks, a shift brought along by the development of automation technologies. From choosing which stock you’ll buy next to designing a living room, and from driving your car to vacuuming your house, intelligent algorithms and robotic devices are increasingly augmenting and mediating our contact with reality. These technologies not only change the way we go about daily activities and open up time for new ones, they also fundamentally reshape the way we think, reason, and behave.

(Credit: Arend Van Dam)

Automation has already triggered a considerable amount of public debate: the safety and liability of self-driving cars, the use of drones as autonomous lethal weapons, and the replacement of labor by robots and computer programs are only the beginning of a long list of concerns. People are increasingly worried about how these new technologies will affect their lives.

However, the impact of technology on society is not unidirectional. Society and technology evolve together and shape each other. As humans and machines share more tasks and decisions, and increasingly complex ones, it will become harder to split a system into “human” and “machine” and analyze each in isolation. A multidisciplinary conversation at UC Berkeley has begun to tackle two key questions regarding the intricate relationships between automation technology and society. First, how can we measure and understand the social and ethical implications of technology? And second, how and to what extent should scientists and engineers explicitly deal with the implications of their work?

Benefits and risks

More and more companies and governments are embracing the use of automation. Techniques such as predictive modeling, artificial intelligence (AI), and machine learning are increasingly used to replace or supplement human labor and to automate decision-making processes. Ten years ago, self-driving cars, drones, and AI were still high-risk research projects primarily conducted by universities. Today there is broad agreement that automation can and will offer novel ways to solve challenging societal problems. Unmanned aerial systems, popularly known as drones, can be used to help first responders determine viable rescue and recovery strategies in inaccessible terrain following natural disasters. Cars with autonomous capabilities can help reduce traffic congestion and greenhouse gas emissions and drastically diminish the number of car accidents (the leading cause of death of young people in developed countries). Robotics and automation technologies have great potential in a wide range of domains, and capabilities and applications have grown dramatically in the last decade.

Throughout her half-century career, Ruzena Bajcsy, a professor of robotics in the Department of Electrical Engineering and Computer Sciences at UC Berkeley, has envisioned a clear goal for automation: to empower people and improve their productivity. An experimentalist at heart, Bajcsy spent the 1970s and 1980s at the University of Pennsylvania pioneering the field of active perception: teaching robots to react to what they saw and decide where to look next. This goal seemed out of reach a couple of decades ago, when measuring and pushing information in and out of computers was a slow and arduous process. But recent developments in hardware have changed everything.

“Initially, computers were designed for supercomputing applications in fields such as nuclear physics, which mainly required pushing data from memory to processor and back as fast as possible,” explains Bajcsy. “They were not suited for controlling robotic systems for which communication and dynamic feedback interactions matter.” The consumer market played a major part in shifting the role of computers. As people started adopting personal computers and the Internet, the costs of hardware and computing decreased, which enabled further development of communication technologies. This self-reinforcing cycle led to the adoption of interactive devices that have far broader applicability than just the needs of consumers. “The fact that everyone has a cell phone that can communicate with a server has helped us,” says Bajcsy. “The reason for the technological revolution in robotics is that sensors and input-output devices are now much more available and interfaceable.” While these changes are accelerating the rate at which engineers can design and develop new systems, they are also rapidly transforming the way in which we behave and interact with the world around us.

As excitement increases among users, developers, and potential beneficiaries of automation, a growing community of public leaders and experts is focusing on its risks and unintended consequences. In 1960, the renowned philosopher and mathematician Norbert Wiener, an early influential advocate of automation, pointed out that “machines can and do transcend some of the limitations of their designers, and in doing so they may be both effective and dangerous.” Since July 2015, over 19,000 people (many of them researchers in AI and robotics) have signed an open letter taking a stance against the use of AI to develop autonomous weapons. The signatories were headed by Stuart Russell, a professor of AI in the UC Berkeley Department of Electrical Engineering and Computer Sciences, physicist Stephen Hawking, and tech entrepreneur Elon Musk, among many others. The letter anticipates that if an AI arms race is pursued, autonomous weapons will be mass produced at low cost by all significant military powers, and argues that “it will only be a matter of time until they appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing, etc.”

Ruzena Bajcsy and Cathryn Carson at UC Berkeley, and Jeroen van den Hoven at Delft University of Technology in the Netherlands, research the entanglement of society and automation. (Credit: Bajcsy: copyright Peg Skorpinski/UC Regents, 2014; Carson: Berkeley Institute for Data Science; Van den Hoven: Sam Rentmeester)

But weapons set loose are not the only potential danger. Automation technologies have touched the lives of millions, yet most users have very little understanding of their underpinnings. This can make it hard to know what to do or how to behave when something goes wrong. What if the anti-lock braking system of your car suddenly stopped working as you slammed the brakes on the highway on a snowy day? Without this smart system, would you still remember how to safely bring the car to a stop yourself?

Similar and perhaps more worrying situations have happened in the skies. When the airspeed sensors failed on Air France flight 447 in 2009, the autopilot disengaged. At this point, the pilots should have assumed control and kept the aircraft flying on its current course. Instead, bewildered by the unexpected behavior of the automation and the inconsistency in the sensor readings, they made abrupt and inappropriate control decisions, ultimately causing the plane to crash into the Atlantic Ocean. The pilots were well trained to fly the Airbus A330, but the interplay between the humans and automation failed catastrophically, with fatal consequences for everyone on board. A few years later, in 2013, the Federal Aviation Administration (FAA) concluded that pilots had become too dependent on autopilots and other computerized systems. The FAA sent out a notice to all airlines warning them that overuse of flight automation could erode pilots’ ability to quickly recover the aircraft from an undesired state and urged airlines to promote manual flight when appropriate.

What this tells us is not that automation is a bad idea, or that automation systems are inherently dangerous—in fact, automation on board passenger airplanes is one of the key reasons that air travel is considered the safest mode of transportation, with less than one death per 10 billion passenger miles. Rather, it suggests that we need to explicitly account for how people and automation work together, and how these interactions can lead to unexpected pitfalls. A lack of understanding of automation and its effects on our own behavior can result in feelings of unease or mistrust towards these technologies, especially when things don’t go as they should. Bajcsy stresses the need to “convert our formal work into more popular language so that the common man and woman understands what we are trying to do.” When creating technologies with potentially wide impact, engaging the public is crucial. At the same time, it is equally important to solidify our understanding of the potential risks and unintended consequences of technology.

The good, the bad, and the unintended

Elucidating the consequences of technology is not easy, even for the people who designed the technology in the first place. Cathryn Carson, a professor of history of science at UC Berkeley, was working with engineering students on the societal implications of nuclear power when the 2011 nuclear disaster in Fukushima struck. It was a pivotal moment for Carson, who collaborates with nuclear engineers and data scientists by helping them engage with societal issues related to their work. After the disaster in Japan, she was surprised to find that engineers often feel powerless when facing societal forces, especially as unintended consequences unfold. “I had assumed that social scientists were the only powerless ones,” she wrote.

Engineers are often educated under a paradigm that leads them to believe that by methodically and diligently designing a system, one can control its outcomes. This may be a safe assumption for many engineered systems, such as bridges or power plants—until a tsunami floods the emergency generators meant to prevent core meltdown, or a strong wind causes a suspension bridge to oscillate until it collapses (as was famously the case of the Tacoma Narrows Bridge in 1940). Nature finds creative ways to trump our best efforts, but human beings can be just as unpredictable. As automation technologies become more intimately coupled with our daily lives, the traditional assumption that humans are external to the engineered system breaks down. However, quantitatively modeling the cognitive, behavioral, and social characteristics of a system is an extremely intricate task—one that can be daunting for engineers, whose training and expertise most often lie elsewhere. “You [engineers] can do it based on your own intuitive sense, but there is no theory that you seem to have,” reflects Carson. “I wonder how you grapple with this ‘unchartedness’ of the territory.” This challenge calls for engineers to join efforts with experts from different disciplines. “We really have to penetrate the social sciences,” agrees Bajcsy.

A physicist by training, Carson has investigated the roles of science and technology in Western society throughout the 20th century, a relationship that she refers to as a set of entanglements. “I try to avoid talking about implications of technology,” she explains. “Even finding the right language is a key issue; framing the problem is part of the challenge. I use the word entanglement because it’s not about causes and implications, it’s about the embeddedness of all of these things in each other.”

Understanding this entanglement is especially critical when technology is used maliciously. Innovators may have good intentions, but with technology distributed in electrical grids, homes, and back pockets, the possibility of causing direct harm to people or infrastructure is serious. Recently, an American engineering student uploaded a YouTube video showcasing a quadrotor helicopter carrying a handgun that could be fired remotely by the user, effectively turning the vehicle into a remote-controlled killing machine. The video already has over three million views and has stirred up considerable unrest. In 2013, the design for a 3D-printable single-shot handgun, named the “Liberator,” was made freely available online. Even though the US Department of State reacted within two days of the initial release, forcing the designer to retract the plans, by then the blueprint had been downloaded over 100,000 times. The attempt to control its spread was futile: the plans can still be found online today. Neither quadrotors nor 3D printers are intrinsically offensive or lethal technologies, yet they can be turned into tools to facilitate violence with relative ease. At the same time, Bajcsy believes it is important to avoid assigning intrinsic moral value to technologies like drones, 3D printers, or robotics. “I think we need to explain to the public that what we are developing is general, and can be applied in many good ways and bad ways, like anything else.”

Unintended consequences need not be violent or due to technological misuse to cause serious concern; they can emerge simply as side effects of automation. In their book The Second Machine Age, Erik Brynjolfsson and Andrew McAfee, experts in technological progress, lay out their vision of a future in which almost all tasks that can be programmed will be taken over by automation and robots. Bajcsy has long worried about such a future, asking: “As we replace jobs with automation, how should the benefits of that automation be distributed?” If robots not only take over physical labor, but also mental labor—such as administration, analysis, or communication—there may be a decrease in the overall demand for labor. Could this lead to an economy in which all or most of the benefits go to whoever owns the robots and writes the algorithms? And do we want to wait to find out?

Technology sometimes gives rise to ethical dilemmas and draws us into completely uncharted territory. Japanese researchers recently studied what happens to robots operated in a public space and found that children tended to bully the machines. The researchers not only documented this bullying behavior, but also used their insights to help the robot avoid abusive situations, which more easily arise in groups of children with no adults nearby. This example might sound innocent, but it highlights how the advent of advanced robotics and automation will soon require us to face questions that we could not meaningfully pose before.

What might happen when people form emotional bonds with their robots? Recent studies have revealed that people experience gratitude toward their Roomba robotic vacuum cleaners and feel motivated to clean for them so they can take a break. A military test of a mine-defusing robot was aborted in 2007 because the colonel claimed it was “inhumane” to watch the machine attempt to carry on after having been damaged by successive explosions. These are not robots meant to evoke any form of emotional response, yet people naturally project intentions and feelings onto them. What will happen when personal care robots, or even robots meant to emulate sexual or emotional partners, hit the market? “When robots interact in society—and they always will—they should be programmed so that they shouldn’t hurt people,” Bajcsy argues. But hard-coding morality into machines is not enough, she says. “How do we train and educate people to live in this mixed society?”

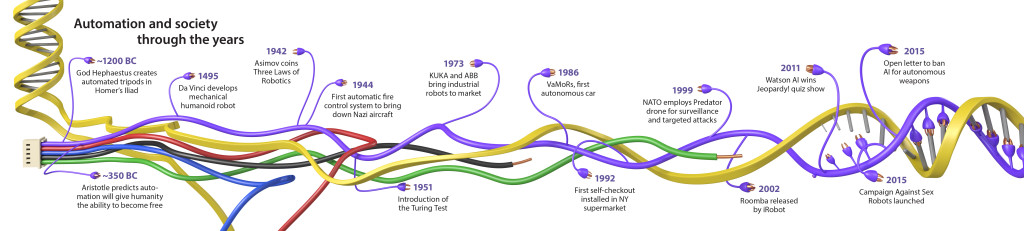

(Credit: Florian Brown-Altvater)

Reining in the carousel of progress

To what degree can we steer, or prevent, technological development to avoid undesired consequences? Most agree that while certain limitations may be enforceable, there are also limits to what we can limit. “I don’t think you can stop technological development,” Bajcsy argues. “If you don’t work on it, five other people will. The question is, can we at least raise consciousness of the social consequences among the developers?”

In the past, other game-changing technologies have undergone regulatory change after the introduction of academic ethical standards or political agreements between countries. In 1975, at the Asilomar Conference on Recombinant DNA, researchers self-organized to establish a set of safety guidelines for genetic experiments to avoid accidental creation of biohazards. In the disarmament process after the Cold War, political agreements between the US and the USSR prevented a further arms race in thermonuclear weapons that could lead to a doomsday scenario. By cutting funding at the national level, the two countries effectively halted nuclear warfare research, since they were the only military powers able to conduct it at the time. However, as new players have come into the game in recent years, concerns about nuclear warfare are resurfacing and the research community has started to mobilize again.

The Asilomar Conference showed that a research community can guide government and society, defining the boundaries of what should and should not be tolerated. “There were concerns among the scientists that if they did not come up with decisions themselves, they were going to be put forward by non-scientists less knowledgeable about their field,” Carson explains. This resonates with Bajcsy’s thoughts comparing the discussion around nuclear enrichment with that around autonomous weapons. “Our problems in automation are very similar but also very different. Nuclear engineering has a much more centralized control, whereas automation technology is much more dispersed and accessible to many, including those who are adversarial or uninformed.”

The distributed and subtle nature of such technologies became evident in the recent Volkswagen scandal, which may affect the owners of more than 11 million vehicles. The company programmed its diesel cars with automation software that enabled the cars to recognize when they were undergoing emissions tests, and to adjust their engine control to reduce emissions only while being tested. If even the most respected enterprises are misusing automation technology, how can we make sure that what we consider unintended or unethical does not become common practice?

Given the distinction between centrally controlled and highly dispersed technologies, when is it critical to introduce rules or limitations to understand and control the effects of a new technology? Jeroen van den Hoven, a professor of ethics and technology at Delft University of Technology in the Netherlands, studies moral dilemmas in technology and innovation. He explains a crucial phenomenon in technological development known as the Collingridge dilemma: “At a very early stage of developing your technology, you have an incredible amount of freedom, but you lack information; you need feedback. Further in the process, the degrees of freedom go down as your technology is already out there being used, but you get information back. You need to find an optimum in developing technologies, where you still have enough freedom to design your system in different ways, but also get feedback to inform design, implementation, or decisions about utilization.”

This dilemma is especially challenging when trying to regulate a fast-moving technology. By the time it becomes clear what needs to be done, it might already be too late, as in the case of the 3D-printed gun. If autonomous systems are so easy to build and operate, how can we hope to stay in control as a society? “Applying the international treaties for armed conflict is extremely difficult in the case of autonomous systems,” Van den Hoven admits. “The enabling software within a weapon system might be fairly innocent when implemented on a different type of platform.” This is a problem inherent to general-purpose technologies. An artificial intelligence breakthrough that could allow doctors to reliably detect cancerous tissue in a patient might, in a different context, enable an intelligent weapon to decide which persons, vehicles or buildings are imminent threats and therefore valid targets. Should this cancer-detecting technology be developed? On one hand, it is hard to argue against developing an algorithm that can be used to save many lives; on the other hand—as the signatories of the manifesto against autonomous weapons indicate—we cannot assume that our technology will never fall into the wrong hands.

Anticipate and integrate

Regulation will most likely be put into place to prevent harm, but it remains to be seen what can be done to address ethical challenges more proactively. Given the concerns and scenarios we have discussed, it seems important to anticipate the potential interactions of a new technology with society.

(Credit: Arend Van Dam)

This year, Stanford University began a project called the One Hundred Year Study on Artificial Intelligence, a long-term program that aims to conduct an interdisciplinary and comprehensive study on the multifaceted effects of AI on society, including economics, privacy, law, ethics, crime, warfare, and autonomy. Because it is difficult to anticipate all the issues and opportunities of AI, the study must be ongoing. Continually assessing the outcomes of developments in AI will hopefully allow researchers to better anticipate ethical challenges in the future.

Carson is one of many who argue for considering the societal impact of technology, and integrating these predictions into research and design. She says, “I find it helpful to focus on the process, rather than trying to scope out what the outcomes might be. I figure that the more strongly you build the research or design process, the more likely you are to get to a good outcome.” Van den Hoven is spearheading a community in Europe that centers on the concept of Responsible Innovation (RI), an emerging research field that tries to integrate the assessment of the societal and environmental impacts of technology during the research and innovation process. “Innovation is about releasing new functionality into the world,” he says. “The first question you have to ask yourself is: is this really a good thing?” And there are many questions to ask for any new technology: “Have you done your research well? Have you spoken with parties who are directly or indirectly affected by this technology? Is the technology the best expression of the things we care about?” Although RI is still a young field, some of its insights have convinced the European Commission to integrate RI practices into its grant programs.

An important ingredient in both of these initiatives is the collaboration between practitioners working on the technology and experts from traditionally less-involved fields such as law, policy, technology assessment, ethics, and philosophy. It remains to be seen whether existing barriers, especially among scientists and engineering researchers, will allow embracing such new initiatives. “It is not yet mainstream; not part of the routine and regular thinking. We will have to invest incredible amounts to educate people and change mindsets,” Van den Hoven asserts.

Changing a culture is perhaps one of the most challenging and slow-moving processes we face in life. We believe the socially involved melting pot at Berkeley is an ideal place to start. “Wouldn’t it be cool,” ventures Carson, “if one of the features of Berkeley Engineering were that it really tapped into these issues? And even if it is not in the form of curriculum, could it become part of the Berkeley signature of what it means to be an engineer who graduated from Berkeley?”

It’s up to the automated us

We are seeing the dawn of a fascinating time, when automation technologies, powered by human ingenuity, will permeate many aspects of our everyday lives and empower us to achieve things we can hardly imagine. It will be up to human judgment, good faith, and common sense to channel this immense potential for the benefit of society and individuals.

Engineers and scientists will not do this alone. Nor will ethicists or sociologists. At UC Berkeley and elsewhere, a conversation needs to happen with insights flowing in all directions. Some may argue that any attempt to control the future of automation is doomed to fail before it starts—that technology cannot be stopped or even steered. And yet, as we have seen, nothing could be farther from the truth. Technology is not out of human reach; it is intimately entangled with all aspects of our lives. If embraced and understood, this entanglement can, and should, be used in our favor. “Technology isn’t what makes us ‘posthuman’ or ‘transhuman’,” writes author Nicholas Carr in his book The Glass Cage. “It’s what makes us human.”

The responsibility to morally guide our automated future—the automated us—lies on all of our shoulders, and we are all part of this discussion. Sharing different perspectives from all over campus would be an exciting place to start. “There will always be a group of people on the boundary who are open towards the other side,” Bajcsy reflects. “To my mind, our hopes lie with that group.” We hope to meet you at the boundary.

The authors encourage anyone interested in joining the discussion to reach out with comments and ideas at automating.us@berkeley.edu

This article is part of the Fall 2015 issue.