Catch the brainwave: Cognitive Technologies at the Exploratorium

February 6, 2015

All images courtesy of Anja Ulfeldt

Getting his ticket to the Exploratorium on the opening night of the new exhibit Cognitive Technologies, Berkeley neuroscientist Jack Gallant was asked if he thought it would work. “It better work,” he said. “It’ll be amazing if it works.”

Gallant’s actual words were, characteristically, a smidge more colorful. After all, his students had worked hard for weeks on the exhibit, the Exploratorium’s very first created by an outside group. They joined forces with the Berkeley-based Cognitive Technology group, an incubator whose stated goal is to create tools to better understand, extend, and improve the human mind, and m0xy, a community-based center for industrial arts in Oakland.

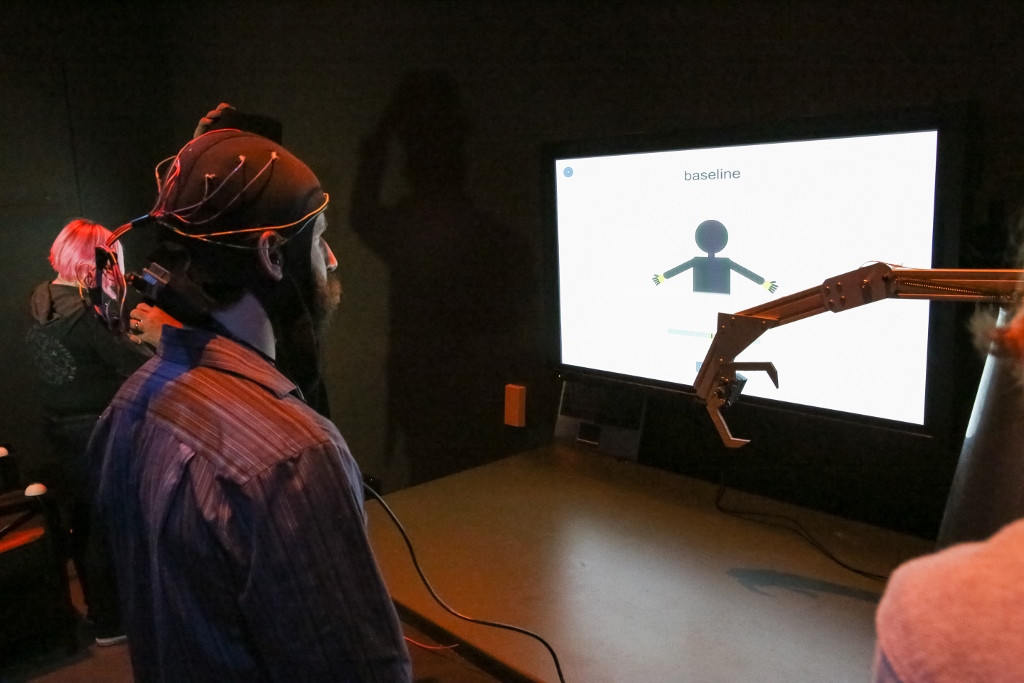

On entering, guests are fitted with headsets that read their brain waves. At the Calibration Station, they open and close toylike lotus sculptures by switching among targeted brain states like relaxation, excitement, and focus. Guests can then explore gorgeous brain data visualizations with hand gestures, solve an illuminated sculpture-puzzle by focusing, or learn to control a robotic arm by imagining movement. “No Magic Here,” a plaque announces, explaining that the EEG (electroencephalography) headsets pick up brain waves from the surface of the head.

The room is aglow with LEDs and aflutter with excitement. In a corner, an armchair invites headset-wearing guests to learn to change their emotions on demand. Elsewhere, people don Oculus Rift virtual reality headsets, one of which allows them to navigate the brain’s maze of blood vessels from the point of view of a clot-busting white blood cell. Some people instead take advantage of opening night to pick the brains of the experts.

Two such experts are James Gao and Natalia Bilenko from the Gallant lab. They were recruited to work on the exhibit by Stephen Frey, director of Cognitive Technologies and a fan of Bilenko’s work on Dr. Brainlove.* For the Exploratorium, she and Gao worked to make interactive visualizations of the brain with Gao’s software, Pycortex.

The Gallant lab has been making splash after splash in the world of neuroimaging. They have worked out how to perform what is essentially a scientific magic trick. By showing people pictures and videos while their brains are scanned, they train computer algorithms to recognize the ways in which visual information is encoded in the brain. Then, like a magician pulling the correct card out of a deck, their algorithms can decode brain activity in future scans to guess what people are seeing. It’s not quite mind reading, but for many, it sure seems close.

“I'm hoping to teach people a little more about fMRI (functional magnetic resonance imaging) research, and how it really isn't magic,” says Gao. Huh. A whole nightclub’s worth of blinky lights in that room, and no magic. Go figure.

“With fMRI, we’re not even recording from neurons,” Gallant would want me to note. Gallant is notoriously precise in describing his work. “It’s blood flow. We’re measuring blood flow,” he says, often. True, fMRI tracks changes in blood flow in the brain, but fortunately, we know that rushes of blood to the head are just smoke signals from an underlying neuronal fire.

There is, however, a more fundamental limitation to telling someone what they are thinking: That person will always know better than you. There are many reasons why this will always be so, starting with the fact that subjective experiences are generated by a brain containing more connections than the entire internet.

Another reason is that with brain recording techniques, there is a tradeoff between convenience and accuracy. The less invasive a technique is, the muddier the signal it gets. And since nobody just volunteers to have their skull cracked open right there on the museum floor, we must take what we can get. The Exploratorium exhibit relies on lightweight, wearable EEG headsets donated by Muse. When a guest leaves, museum attendants can simply sterilize their headset like a pair of bowling shoes, recharge it, and hand it off to the next neuroadventurous guest to walk in. Great for walking around, not so great for a clean signal. A major bottleneck in moving forward with much-hyped, futuristic projects like neurogaming, for instance, turns out to be literally getting the signal through our thick skulls.

Overhead at the Exploratorium, guests’ brain waves were plotted as part of the Cloud Brain project. Two museum-goers stood below, regarding the screen with no small amount of confusion. “Does this mean I’m not really thinking anything?” one asked. “I must be at like a yoga retreat now or something,” said the other.

Although it may not seem like it, these guests were on to something. The mismatch between their lived experience and their recorded brain waves could have been a hardware problem, rooted in the limitations of the EEG headsets. But let’s pretend for a moment we did have perfect data. Even then, to really understand what we’re recording, scientists have to connect different sources of evidence and make inferences about what is going on. “Our results are based in tested reality,” says Gao. “For example, damage in brain areas that show an fMRI response to seeing faces actually causes real deficits in face perception.”

Interpretations of the data rely on a boiled-down version of a vast literature; we are standing on the shoulders of giants. Any individual’s headset experience is classified as meditation, excitement, or focus based on what’s been seen in past experiments. You are a single data point at the end of a very long running average, so your mileage may vary. They did warn you there would be no magic.

John Naulty, a member of the Cognitive Technology group, spent opening night affixing electrodes to people’s heads to allow them to control a robot arm. “We wanted to get people to think about this kind of technology, see where current prototypes are, and if they’re interested, help us make them better,” says Naulty. “There is a lot of room for improvement.”

And yet, many of the technologies in the exhibit are at the bleeding edge of what is possible in the laboratory, let alone in the wild. Guests get the first-person experience of putting on a headset and seeing their mental states projected out onto the world. They get to ask whether what a computer decides is calm is really calm, whether their focus is really focus. Guests line up the data with their own best working models and give them both the stink-eye, and before they know it, they are playing scientist. They leave with a new perspective on where we stand in understanding and controlling the brain. We know a lot, but we don’t know everything.

Hardware and software issues aside, what I found most impressive was the scientists’ sheer dedication to making these technologies accessible. The exhibit came together beautifully in the end. Volunteers from UC Berkeley, the Cognitive Technology group, and m0xy will continue to man the exhibit for the next month. “These people are passionate, and it's contagious,” says Naulty.

“The biggest challenge for making the demo museum-ready was making it free-standing for a whole month with no intervention,” says Gao. “The interface has to be easy enough to use that someone can walk up with no previous knowledge and be able to work the exhibit.”

“James ran desperately into lab today to grab something or other off his computer,” Gallant helpfully confirmed on opening night.

I once read a super impassioned screed on the Muppets’ shift towards computer animation, and believe it or not, I think it’s relevant to the experience of interacting with these technologies in a museum and not just on YouTube. Of the irreplicable thrill of the old-school Muppet experience, Elizabeth Stevens writes, “As viewers, we know on some fundamental level that what we are seeing is not CGI, but a room full of exhausted, exhilarated professionals with their arms stuck inside of puppets.”

Cognitive Technologies creates a similar thrill, which one of the exhibit’s creators summed up beautifully in a Facebook post after opening night. Mike Pesavento, who with Bilenko gave Dr. Brainlove her EEG functionality, had worked to bring beautiful electron microscopy images and an Oculus Rift demonstration to the Exploratorium. He writes as only an exhausted, exhilarated science hero elbows-deep in Arduino boards could:

> An amazing brain trust of artists and infrastructure and software devs and neuroscientists and hardware hackers got together 8 weeks ago and created an exhibit at the world famous Exploratorium. Bugs were being fixed in the last seconds. A superhero rewrote an entire backend in the last 48 hours to support 20 eeg headsets simultaneously. Partners from around the US reached out to help support this exhibit. Nerves were frazzled, tech didn't work as expected, no one ate (there was whiskey!), and yet it came together. So amazingly happy to be a part of creating the Cognitive Technologies exhibit at the Exploratorium.

Cognitive Technologies runs Thursdays through Sundays from 1-4 and Thursday evenings from 6-9, through February 28th at the Exploratorium.

\* For those of you keeping score, it’s true, I only cover brains and blinky lights.