Reproducible and collaborative: Teaching the data science life

June 11, 2014

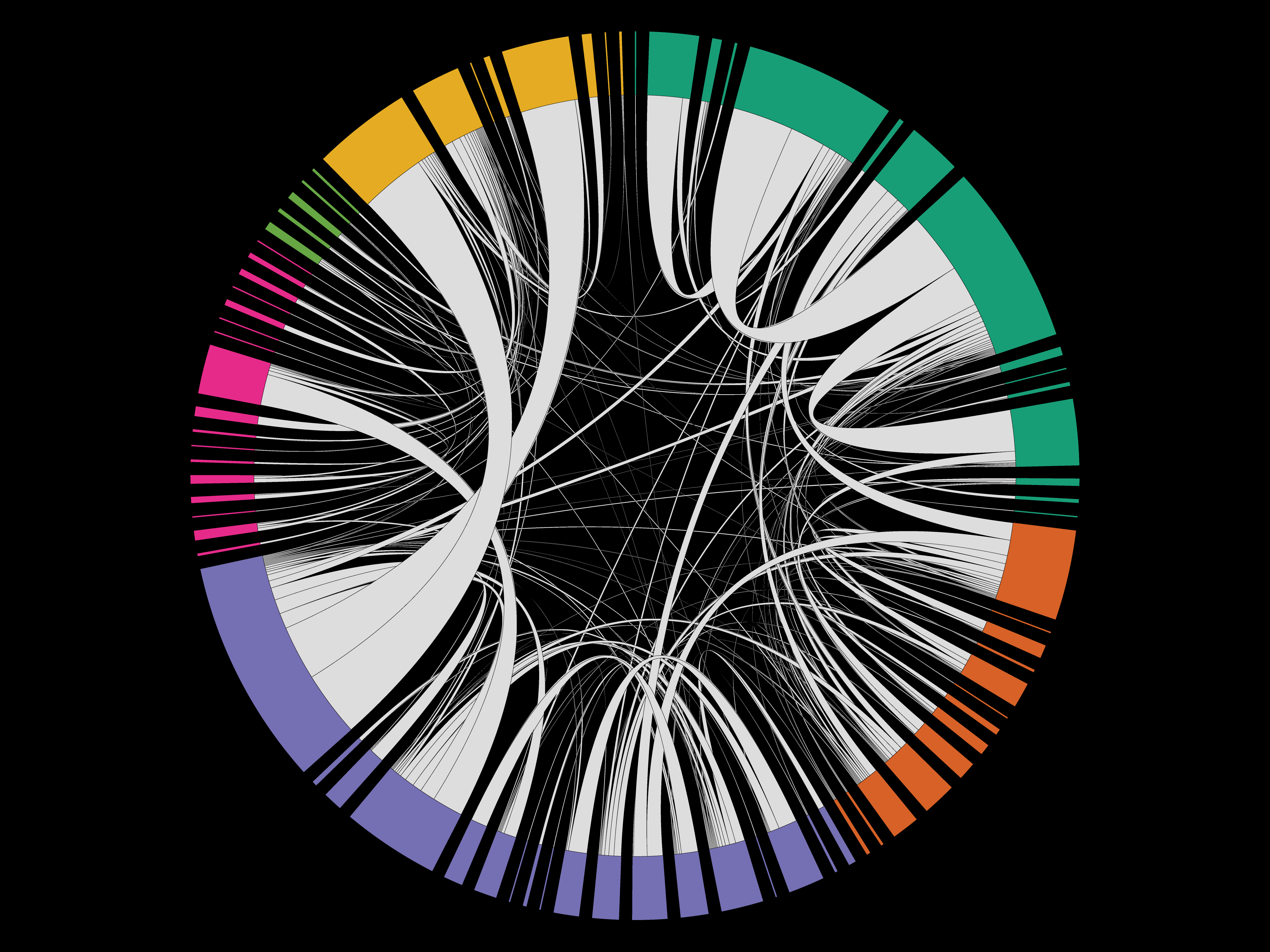

Feature image above courtesy of Natalia Bilenko. Data source: PubMed.

The Spring 2014 issue of the BSR made a splash with Anum Azam’s feature piece, ‘The First Rule of Data Science.’ For many PhD students in the sciences, grim job prospects in academia make the advent of data science seem like a godsend. And so far, blasting the surfeit of PhDs at Big Data like so much buckshot seems to be working out---scientists, after all, are innovators. But, as the field evolves, it will become important to think about how to train a new generation of data scientists. Like Whitney Houston, I believe the children are our future. It is up to us to teach them well and let them lead the way.

Housebreaking the puppies

Philip Stark, a professor of statistics and the department chair here at Berkeley, puts it a slightly different way: “It’s hard to teach old dogs new tricks, so let’s work with the puppies. What are new tricks for my generation are basic housebreaking for this generation.” Stark, along with Aaron Culich of the recently launched Berkeley Research Computing program (BRC), tested the waters of data science pedagogy with their fall 2013 course, Reproducible & Collaborative Data Science (STAT 157). “The idea,” according to Stark, “was to have a project-oriented statistics class that was geared towards solving a real-world scientific problem.”

But the first rule of data science, as Azam wrote, is that you don’t ask how to define it. The very ethos of data science runs counter to the way most stressed-out undergraduates study. In most classes, the point is to race to the top of the curve, so most students appreciate clearly defined goals in the form of syllabi, deadlines, review sheets and rubrics. Anyone who has taught a course at Berkeley will tell you they’re all too familiar with the “Will this be on the final?” mentality---Stark included. In fact, an attempt to visit willthisbeonthefinal.com will redirect you to Stark’s homepage. “The way that our educational system is set up right now really does focus on the individual, the individual’s performance, the individual’s ability to either synthesize or regurgitate or master some corpus of material,” Stark says. Data science, however, requires a more collaborative approach.

Collaboration and reproducibility are inextricably linked. “The first step to making science reproducible is to build good habits,” Culich explains. “Your most important collaborator is your future self. It’s important to make a workflow that you can use time and time again, and even pass on to others in such a way that you don't have to be there to walk them through it.” So how did they teach the skills required for collaboration?

Lofty goals, and no road map

The students’ goal in the class was to use data provided by the Southern California Earthquake Center to create a better way of predicting earthquakes. This goal had no clear road map that could be broken down into simple steps and thrown onto a syllabus. Students knew that sufficient progress could lead to a publication, the holy grail of scientific research, but it wasn’t clear how to get there. “It was a little bit hectic in the beginning,” says Christina Ho, a student in the class. “I think a lot of us weren’t really sure what we were supposed to be doing.”

Culich concurs: “Because our goals weren’t laid out for us step-by-step, one of the first things we had to do was define them, and so we had to just jump right in and talk.” The class started with the basics: What are the units? What was recorded? How was it measured? What do we mean when we say "magnitude"? Then, students were faced with decisions: how to transform, clean, and normalize the data into something meaningful and usable. Finally, they thought about how to turn data into evidence by applying the information they had towards solving a problem. And every step of the way, they had to work reproducibly. “We were willing to go super slowly and make slow progress in our science just to make sure that our work was easy for someone else to pick up,” says Teresa Tenfelder, a student.
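To make the cleaning decisions described above a little more concrete, here is a minimal Python sketch. It is only an illustration: the column names (`time`, `magnitude`, `depth_km`), the sample rows, and the magnitude cutoff of 3.0 are all hypothetical, and none of it is taken from the class's actual catalog or code.

```python
import csv
import io

# Hypothetical raw catalog. The class's real data came from the
# Southern California Earthquake Center and is not reproduced here.
RAW = """time,magnitude,depth_km
2013-09-01T00:10:00,3.2,10.5
2013-09-01T00:12:00,,8.0
2013-09-01T00:15:00,2.1,12.0
2013-09-01T00:20:00,4.7,not_recorded
"""


def clean_catalog(text, min_magnitude=3.0):
    """Return rows whose magnitude is numeric and >= min_magnitude.

    Rows with a missing or non-numeric magnitude are dropped.
    A non-numeric depth is stored as None rather than guessed.
    """
    cleaned = []
    for row in csv.DictReader(io.StringIO(text)):
        try:
            magnitude = float(row["magnitude"])
        except ValueError:
            continue  # missing or malformed magnitude: skip the row
        if magnitude < min_magnitude:
            continue  # below the (assumed) cutoff
        try:
            depth = float(row["depth_km"])
        except ValueError:
            depth = None  # keep the event, but flag the unknown depth
        cleaned.append(
            {"time": row["time"], "magnitude": magnitude, "depth_km": depth}
        )
    return cleaned


events = clean_catalog(RAW)
```

Writing each decision down as code (what gets dropped, what gets kept with a gap) is one way to let a collaborator see, and question, the choices that were made.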

Working reproducibly: the wave of the future

Reproducible computational research is a concept that can be traced back to John Claerbout, an emeritus professor of geophysics at Stanford. By insisting that his grad students work reproducibly, he enabled new students to become productive in one to two weeks instead of the usual one to two years: they could build on the work of the previous cohort almost immediately. Before the emphasis on reproducibility, incoming students spent far more time and effort figuring out where their predecessors had left off. This time sink, Claerbout thought, could be reduced if people developed good coding habits---producing code that was well documented, ran on any platform, had a clear function and clear outputs given a well-defined set of inputs, and could be modified and extended easily. In short, code should be geared towards making results reproducible.
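As a small illustration of those habits (documented code, explicit inputs, predictable outputs), here is a hedged Python sketch. The function name `split_events`, its parameters, and the default seed are invented for this example; it is not code from Claerbout's lab or from the class.

```python
import random


def split_events(event_ids, test_fraction=0.25, seed=2013):
    """Split event IDs into (train, test) lists in a repeatable way.

    Inputs: a sequence of IDs, the fraction to hold out, and a seed.
    Output: two lists that together contain every ID exactly once.
    Rerunning with the same inputs gives the same split.
    """
    if not 0.0 <= test_fraction <= 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    ids = sorted(event_ids)    # result should not depend on input order
    rng = random.Random(seed)  # local generator: no hidden global state
    rng.shuffle(ids)
    n_test = round(len(ids) * test_fraction)
    return ids[n_test:], ids[:n_test]


train, test = split_events(range(8))
```

The design choice worth noting is that everything the result depends on is passed in as an argument, so a newcomer can rerun the step without knowing what state the original author's machine was in.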

Reproducibility lies at the heart of the twin zeitgeists of data science and open access, currently revolutionizing the way science is done. “It’s not just what you know anymore, it’s how you work,” as Stark puts it. “We have largely abandoned the scientific method, especially in ‘big science.’ We’ve traded ‘show me’ for ‘trust me.’ The whole point of science is that you shouldn’t have to trust the experts. Some people are afraid of working reproducibly---exposing their work---because others might find a mistake. But here’s a different way to think about it: If I say ‘trust me’ and I'm wrong, I'm untrustworthy. If I say ‘here's my work’ and it's wrong, I'm honest and human.”

In the end, the class implemented a predictor that was much simpler than the current state-of-the-art Epidemic-Type Aftershock Sequence (ETAS) model, and whose performance was almost indistinguishable from that of ETAS. And their software pipeline was automated and deposited in a GitHub repository, so anyone else can easily pick up where they left off. That is an important contribution to the field, and one that can be learned and built upon quickly because of the students’ attention to detail.

Working collaboratively instead of beating the curve

Working reproducibly presented one set of challenges, while working collaboratively presented another. Realizing that harmonious, effective teamwork doesn’t simply happen, Kristina Kangas, a graduate student in Integrative Biology and the GSI for the course, administered the VARK questionnaire. This test draws on principles of team building, identifying students’ distinct preferences, motivations, goals, and working styles. The results were used to help students find roles that were right for them. This approach allows room for innovation from a group of individuals with diverse intelligences, differing markedly from typical courses where students must all learn the same thing.

Students became data curators, analyzers, visualizers, or presenters. As goals and plans emerged, groups evolved. Some students were more comfortable than others in the role of thinking through the big picture. Other students found themselves in the "I just want to be told what to do" camp. These students often had a skill to bring to the table, such as being a Python wizard, or having a strong background in statistics. Many students stepped into the roles that they felt comfortable in. Others took on roles outside their comfort zone that provided the training they sought.

To create a reproducible data analysis pipeline, teams had to have conversations about how the pieces would be handed off from one person to another. Was a data curator getting the data the analyzer needs? Was it in a usable format? Even deciding who to talk to about handing off one’s portion could be a challenge. “Working with people is hard,” Tenfelder confesses. “The collaboration was the most difficult, but at the same time, the most valuable, aspect of the class.” Seeking opportunities, discussing plans, and building collaborative relationships are skills that are crucial to success in a data science career, and yet these skills are seldom taught in the classroom. In the end, Jody Zhang, a student, found it worthwhile, adding that “the frustration turned into an opportunity to really learn and grow a lot.”
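The handoff questions raised above (is the analyzer getting what it needs, in a usable format?) can also be written down as a check that runs automatically. The following Python sketch assumes a hypothetical agreement between curator and analyzer, `REQUIRED_FIELDS`; the field names and types are illustrative only and do not describe the class's actual pipeline.

```python
# Hypothetical contract agreed between a data curator and an analyzer.
REQUIRED_FIELDS = {"time": str, "magnitude": float}


def check_handoff(rows):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    for i, row in enumerate(rows):
        for field, expected_type in REQUIRED_FIELDS.items():
            if field not in row:
                problems.append(f"row {i}: missing field '{field}'")
            elif not isinstance(row[field], expected_type):
                problems.append(
                    f"row {i}: '{field}' should be {expected_type.__name__}"
                )
    return problems


good = [{"time": "2013-09-01T00:10:00", "magnitude": 3.2}]
bad = [
    {"time": "2013-09-01T00:10:00", "magnitude": "3.2"},  # magnitude is text
    {"magnitude": 4.7},                                   # time is missing
]

good_report = check_handoff(good)
bad_report = check_handoff(bad)
```

A check like this does not replace the conversation, but it records what was agreed so that the next person in the chain can rely on it.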

Culich believes that what students learned about teamwork in data science closely matches what he learned from a YouTube sensation known simply as Dancing Guy. Dancing Guy shows us what it is to be a leader. First, a leader must be easy to follow. And second, a leader understands the value of their first follower. Without that follower, the leader is just a lone nut who isn't connecting to anyone. In a rapidly evolving field like data science, identifying good ideas and cultivating good work relationships can turn out to be just as important as cultivating good work habits.

Preparing students for jobs in a changing climate

“At a minimum, students learned good work habits and how to learn new programming languages,” says Stark. “I hope the experience prepared them to work in an environment where they’re just told, ‘Here are some tools. Now go be brilliant.’” Code reviews, where students discuss a snippet of code, were a way of learning brilliance by example. “There's a difference between learning a programming language and knowing how to program,” says Culich. Instruction tends to be geared towards the former. Seeing a problem solved in an unfamiliar programming language can empower students to copy, paste, and start modifying. Where there is no need to reinvent the wheel, it can be clunky and inefficient to do so.

Students also learned that, to be effective collaborators, they had to identify the resources available to them. Data science is all over campus; you just have to know where to look. The Geospatial Information Facility (GIF), the Statistical Computing Facility (SCF), the D-Lab, the Data Lab, the Berkeley Institute for Data Science (BIDS), and yes, Stack Overflow, were all tremendously helpful. By reaching out and navigating this rich landscape of resources, students connected with smart people all over campus and beyond. Developing a taste for proactive networking and autodidacticism is, of course, a major advantage. Culich explains, “No one takes typing classes anymore. But to say, ‘I don't type’ wouldn't work now. I think we're creating habits, a culture, an ecosystem that I think will eventually be a part of the fabric of the way science is done. Data science seems chaotic and disruptive now, but best practices will emerge.”

Currently, data science is doing a bang-up job of catching what falls out of the leaky pipeline of misfit PhDs. But let’s face it. The practice of retrofitting the highly specialized skillset of your average PhD is a klugey hack. We can, and should, invest in training new data scientists, but data science courses are still in their trial-and-error phase. Student-led courses can serve as laboratories for redefining the problems at hand. And Stark & Culich’s course serves as a valuable jumping-off point. At least this much is clear: we can equip students for this climate by teaching them to think for themselves, ask for what they need, and code for the long run.

When it comes to making science reproducible and transparent, as well as ditching the “will this be on the final?” attitude, further restructuring the classroom experience may help squash some of the unwanted side effects of the educational rat race’s competitive atmosphere. As far as Big Data is concerned, working collaboratively and reproducibly is non-negotiable. Without these practices in place, we cannot turn data into evidence, and without evidence, all the programming prowess in the world is useless.