We are living in a world of information. In a matter of seconds, I can access and transmit vast quantities of information ranging from the important (a 911 call) to the not-quite-vital (“every1 should see da @justinbieber movie” — Shaquille O’Neal via Twitter). But what is information? A satisfying answer eluded most thinkers until a single paper, published in 1948 by Claude E. Shannon, a researcher at Bell Laboratories, laid out an incredibly far-reaching framework for thinking about information, uncertainty, and communication.

By that time, engineers had already developed sophisticated ways of communicating — telegraph, telephone, Morse code, radio. Lacking, however, was a way of thinking about all of these communication systems that described them in comparable terms. How is the information from a dot of Morse code like that from a letter in a telegraph? Shannon noticed the unmistakable similarity of all communication systems — an information source draws symbols from a symbol set and transmits them to a receiver, who then decides which symbols the transmitter sent. Systems might use different symbols, like dots and dashes or letters, but they are bound by Shannon’s underlying theory.

Information and uncertainty are inextricably linked. Consider an alphabet consisting of only one letter. Any message you could possibly write using this alphabet will always end up transmitting exactly NO information. Because the receiver is always 100 percent sure of the identity of the next letter, there is no uncertainty that can be reduced, and thus, the message is information-less. Now, add just one more symbol to the alphabet and the receiver is no longer so sure. Simply adding one symbol bestows the uncertainty needed for communication. Wherever there is a reduction in uncertainty, you can be sure it was information that did it — uncertainty is the Joker to information’s Batman, and the Dark Knight can’t exist without someone to vanquish. Shannon termed his mathematical formulation for uncertainty “entropy.”
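To make the one-letter versus two-letter comparison concrete, here is a minimal sketch of Shannon's entropy formula, H = Σ p · log2(1/p), measured in bits. The function name `entropy_bits` and the example alphabets are illustrative assumptions, not anything taken from Shannon's paper.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy in bits: sum of p * log2(1/p) over symbols with p > 0."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)

# Hypothetical alphabets, described by how likely each symbol is.
one_letter = [1.0]        # the receiver always knows what comes next
two_letters = [0.5, 0.5]  # two equally likely symbols
lopsided = [0.9, 0.1]     # two symbols, but one is far more common

print(entropy_bits(one_letter))   # no uncertainty, so nothing to communicate
print(entropy_bits(two_letters))  # one full bit of uncertainty per symbol
print(entropy_bits(lopsided))     # less than one bit: the receiver can mostly guess
```

The lopsided case suggests why entropy depends on probabilities and not just on the number of symbols: a second letter that almost never appears adds only a little uncertainty.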

Almost as soon as Shannon proposed his theory, it was adopted to study the brain, the undisputed champion of communication. Brain cells, or neurons, are highly interconnected, sending and receiving vast numbers of messages in the form of electrical impulses, or “spikes.” Their signal starts at the information source, the world. The world is very uncertain; even if you consider only one sense, like vision, a nearly endless number of things could appear before you, and the brain would like to acquire information about which one it is seeing. Neuroscientists can use information theory to ask how much information about various stimuli is carried by particular neurons by observing their activity and asking: do spikes in this neuron reduce my uncertainty about which stimulus is present?

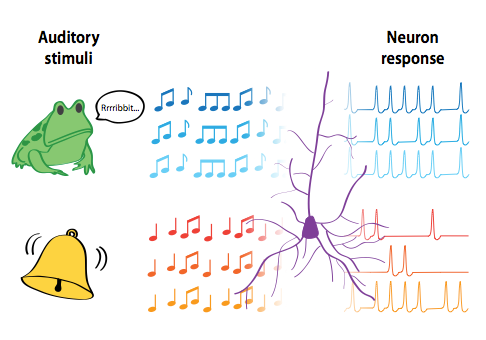

In one such experiment, scientists play sounds to a bullfrog as they record activity from its neurons. At any given time, the neuron could send one of many unique spike train “messages.” That means there is entropy, but is the neuron taking advantage of it? At one extreme, it’s possible that all of the unique spike trains are just random messages that tell us nothing about the stimulus; at the other, perhaps each spike train unambiguously tells us which stimulus was played. Where a neuron lies on this continuum is related to its information efficiency, and research has shown that it depends a great deal on the type of stimuli the frog encounters.

Credit: Amy Orsborn

The figure shows hypothetical responses to three presentations of one frog-like and one noise stimulus. By observing the reliable responses to the frog-like sound (bluish notes), we learn that the blue sound was played. You can imagine that another frog-like sound would elicit a different but consistent response (not shown). A noise stimulus (orangish notes), on the other hand, elicits unreliable responses, giving us less stimulus information. Information theory serves as the unbiased adjudicator that allows us to quantify the information and information efficiency of neural responses to the two sets of stimuli. For noise stimuli, the efficiency is low, between 5 and 20 percent. But for stimuli resembling natural frog calls, the efficiency is astoundingly high, approaching 100 percent, meaning nearly all of the entropy in the neural signal is being used to convey stimulus information. Work like this supports the idea that neurons don’t simply represent everything around them equally, but are exquisitely specialized to convey information about stimuli that are relevant to them, like a frog call to a frog.
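One way to picture the efficiency calculation is as the fraction of the response entropy that is shared with the stimulus. The sketch below is a toy version under loud assumptions: the spike trains are made-up strings of 0s and 1s, the stimulus labels and the function names `entropy_bits` and `information_efficiency` are invented for illustration, and the probabilities are simple counts from a handful of trials. Real recordings need far more data and more careful estimators, so this is not the method used in the bullfrog experiments.

```python
import math
from collections import Counter

def entropy_bits(counts):
    """Entropy in bits of the distribution implied by a Counter of observations."""
    total = sum(counts.values())
    return sum((c / total) * math.log2(total / c) for c in counts.values() if c > 0)

def information_efficiency(trials):
    """trials: list of (stimulus, response) pairs.

    Returns (information, response_entropy, efficiency), where
    information = H(stimulus) + H(response) - H(stimulus, response)
    and efficiency = information / H(response).
    """
    h_stimulus = entropy_bits(Counter(s for s, _ in trials))
    h_response = entropy_bits(Counter(r for _, r in trials))
    h_joint = entropy_bits(Counter(trials))
    information = h_stimulus + h_response - h_joint
    efficiency = information / h_response if h_response > 0 else 0.0
    return information, h_response, efficiency

# Hypothetical data: each frog-like call always evokes the same spike train.
frog_like = [("call_A", "1011")] * 3 + [("call_B", "0100")] * 3

# Hypothetical data: responses to noise vary from trial to trial,
# regardless of which noise stimulus was played.
noise = [("noise_A", "1011"), ("noise_A", "0100"),
         ("noise_B", "1011"), ("noise_B", "0100")]

print(information_efficiency(frog_like))  # every bit of response entropy is about the stimulus
print(information_efficiency(noise))      # the same response entropy, none of it about the stimulus
```

Both toy neurons have the same response entropy; what differs is how much of it tells you which sound was played. The two data sets sit at the extremes of the continuum, while the measured efficiencies quoted above fall in between.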

To put information theory most generally, you would say something like, “Wherever you have something affecting the probability that another thing does something, you have information.” While this sounds trivial at first, the ability of information theory to detect changes of the most general nature has allowed us to use it in contexts where we ourselves are not sure what the meaning is. That is certainly true of the brain, and of many more places, including the genome, Wall Street (two areas that Shannon himself tackled with his theory), and, in short, the world.

This article is part of the Spring 2011 issue.